Abstract

AHA is a large scale simulation model under development at the Theoretical Ecology Group, University of Bergen, that implements a general decision-making architecture in evolutionary agents. Each agent is programmed as a whole virtual organism including the genome, rudimentary physiology, the hormonal system, a cognitive architecture and behavioural repertoire. They "live" in a stochastic spatially explicit virtual environment with physical gradients, predators and prey. The primary aim of the whole modelling machinery is to understand the evolution of decision making mechanisms, personality, emotion and behavioural plasticity within a realistic ecological framework.

HEDTOOLS is a set of modelling utilities and tools (implemented as portable Fortran modules, not object-oriented) that have general applicability and are used for data conversion, input/output, random number generation and execution logging. There are also a few computational tools. Even though HEDTOOLS modules are primarily designed to be used in the AHA Model, they are designed such that they can be used in many different simulation projects.

This document describes HEDTOOLS and outlines the software requirements and general coding style for the AHA model.

References

-

The AHA Model web site is https://ahamodel.uib.no

-

Budaev, S., Jorgensen, C., Mangel, M., Eliassen, S., & Giske, J. (2019). Decision-making from the animal perspective: Bridging ecology and subjective cognition. Frontiers in Ecology and Evolution, 7, 164. doi:10.3389/fevo.2019.00164

-

Budaev, S., Giske, J., & Eliassen, S. (2018). AHA: A general cognitive architecture for Darwinian agents. Biologically Inspired Cognitive Architectures, 25, 51–57. doi:10.1016/j.bica.2018.07.009

Document date: 2023-06-18. Latest PDF version: https://ahamodel.uib.no/doc/HEDTOOLS.pdf

The document is generated with the AsciiDoc markup processor.

Colophon

The AHA Model development is supported by the University of Bergen and the Research Council of Norway grant FRIMEDBIO 239834.

AHA Model is also a partner project of the Digital Life Norway.

1. Software tools and requirements

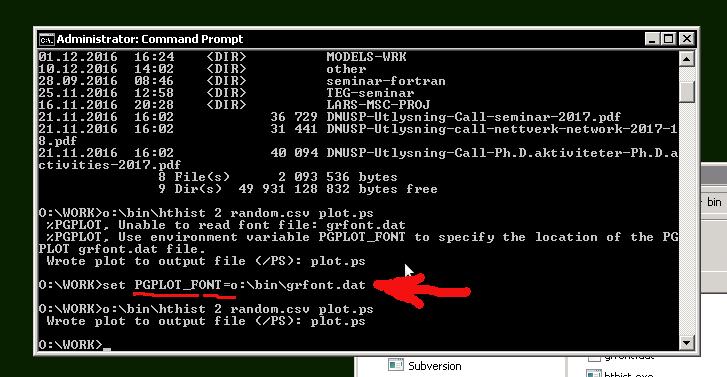

Most tools needed for the model are already available on Linux (e.g. gfortran, make, Subversion, console, midnight commander etc) and are trivial to install using the standard package manager (e.g. apt-get install gfortran on Ubuntu). On Windows they can be installed manually from their official web sites. On Mac use homebrew to install many of the utils. Below are some details on the Windows software.

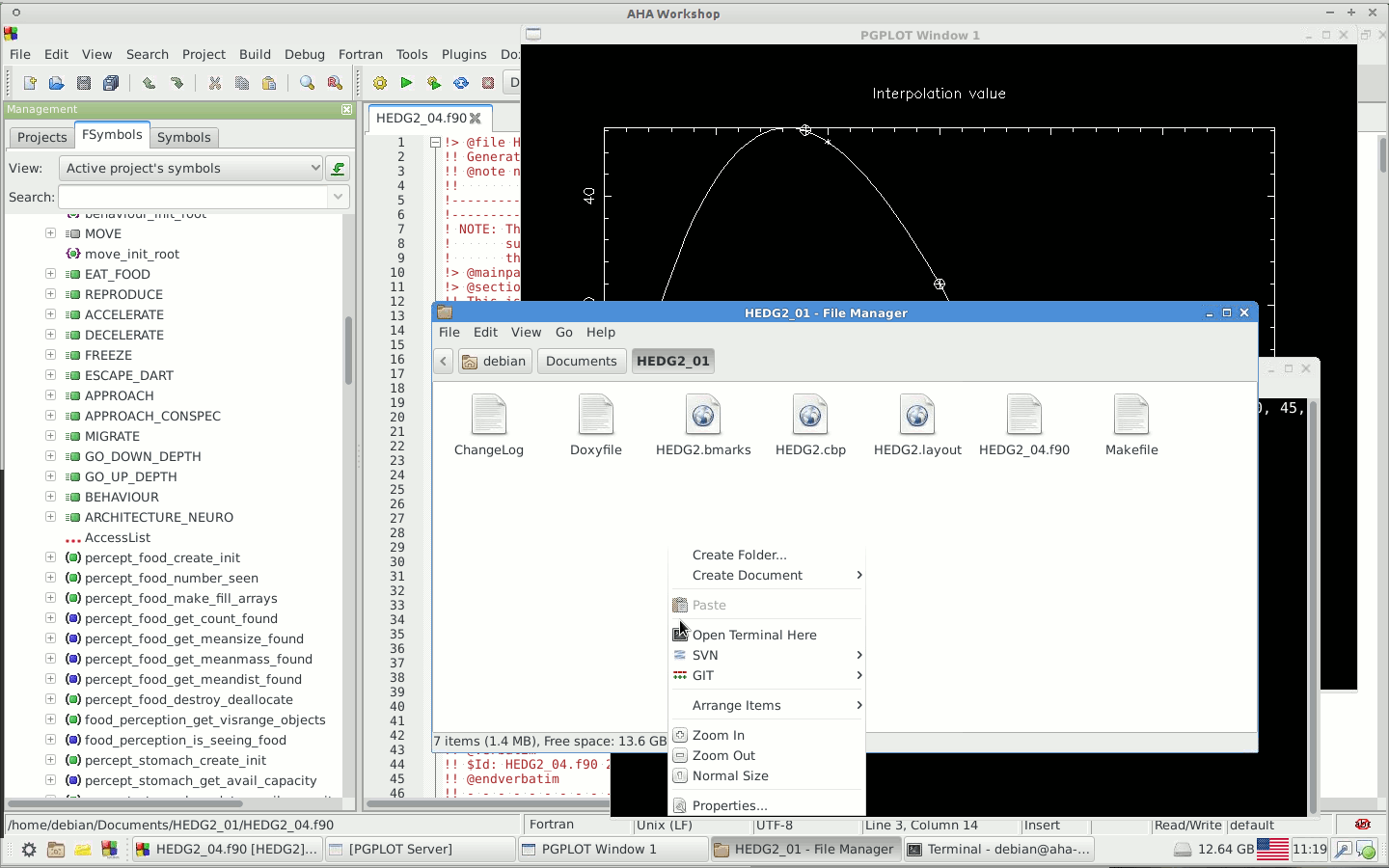

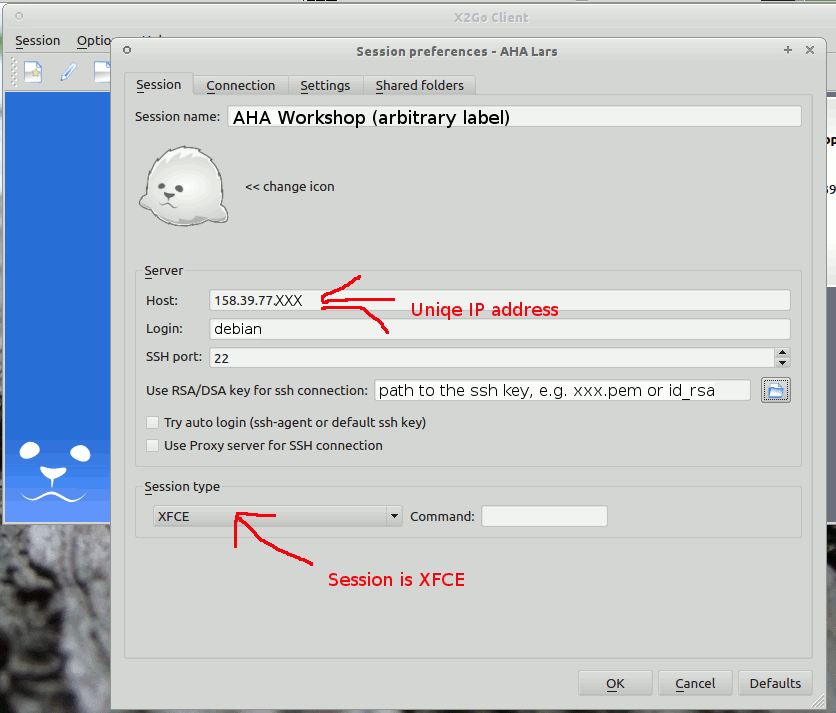

The UiB IaaS-based virtual cloud desktop includes all the essential software components for working with and developing the model.

-

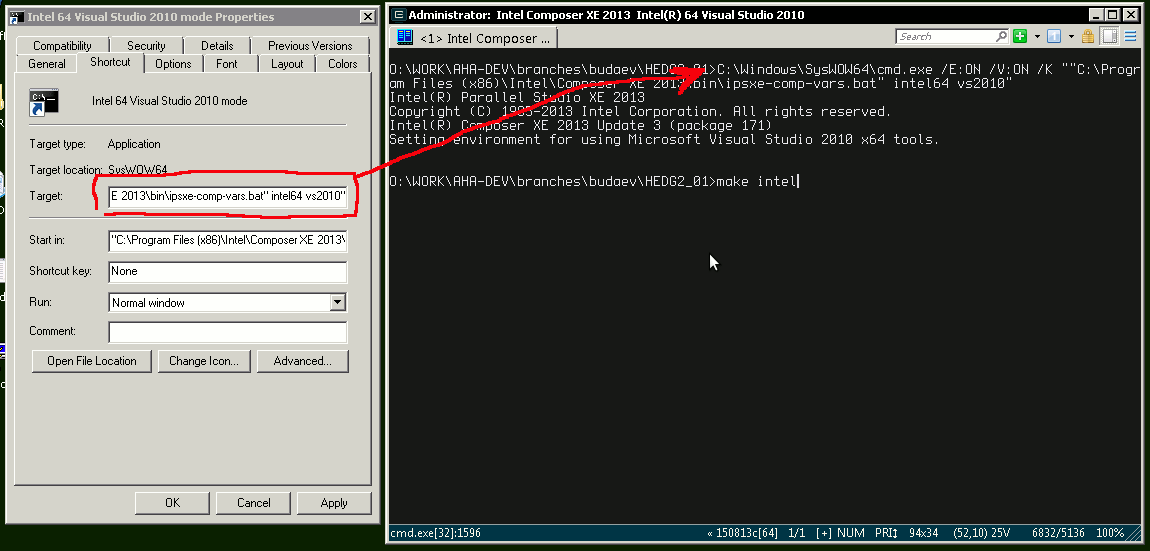

Fortran Compiler (Mandatory)

Intel Fortran compiler, a commercial software available at UiB. Intel Fortran is also installed on the UiB HPC cluster fimm. Free GNU Fortran distribution along with make and other tools is available from the Equation solution http://www.equation.com/servlet/equation.cmd?fa=fortran. There is also Oracle Solaris Studio combining Fortran compiler and an NetBeans-based IDE, freely available from http://www.oracle.com/technetwork/server-storage/solarisstudio, Linux and Solaris OSs only (no Windows or Mac). An extensive set of documentation for GNU gfortran can be found here: https://gcc.gnu.org/onlinedocs/gfortran

-

GNU Make and utils (Mandatory)

This is an automated program build system that keeps track of changes in different components of the source code and generates header files automatically depending on the platform and compiler used. It is possible to work without it, but in such a case everything should be tweaked manually. GNU make is trivial to install on Linux. For Windows, it goes bundled with the Equation solution GNU Fortran.

If GNU Fortran is not needed, executable make can be downloaded separately from the Equation solution web site. It is also available from the Cygwin system and other GNU core utils distros for Windows.

|

|

Two GNU core utilities are mandatory to use the GNU make system: grep, expr, cut, sed and awk They can be installed from any of the GNU distros for Windows. |

The official site of GNU make with the code, manuals etc. is here: https://www.gnu.org/software/make/. An almost complete GNU distribution for Windows is here: http://www.cygwin.com/.

-

Subversion (Mandatory)

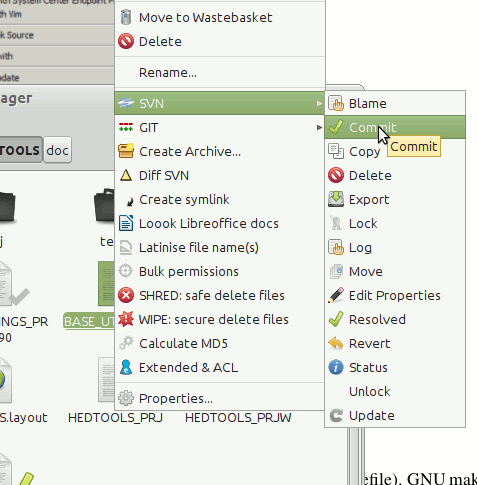

Subversion is a version control system. Windows GUI (graphical user interface) for Subversion is TortoiseSVN (supported by UiB IT): https://tortoisesvn.net/. It is very helpful to have also console Subversion client software: TortoiseSVN includes console tools but they are not installed by default. A good command-line-only Windows tool is SilkSVN https://sliksvn.com/download/. There are many other GUI tools, e.g. PySVN-Workbench and TkCVS that are open source tools available for Windows, Linux and Mac. SmartSVN is a commercial (with free edition) Java-based multi-platform GUI client (http://www.smartsvn.com). There is also SmartGit multiplatform Java-based GUI client that integrates Subversion with Git and Mercurial (other types of version control software), see here: https://www.syntevo.com/smartgit/. Subversion can be integrated with text editors, IDEs and other tools using various third party plugins.

-

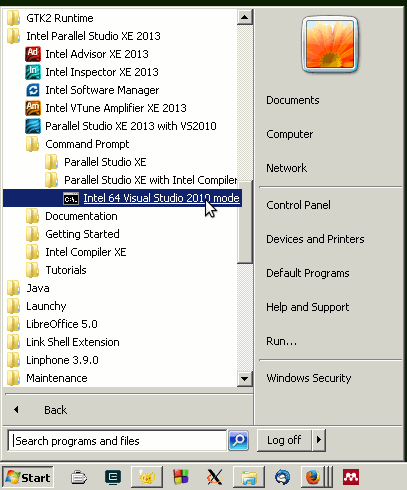

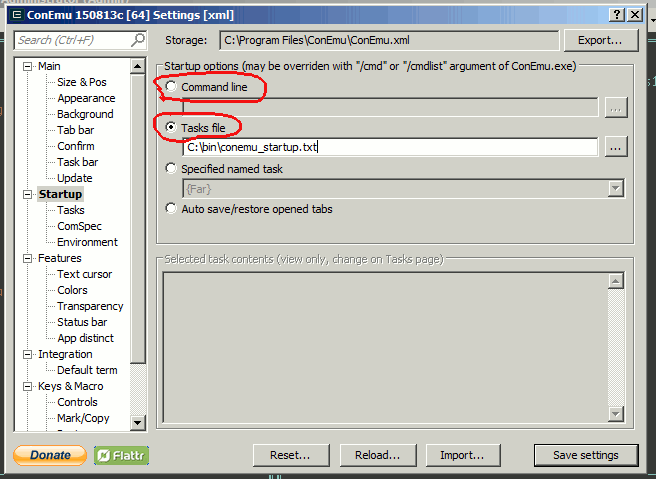

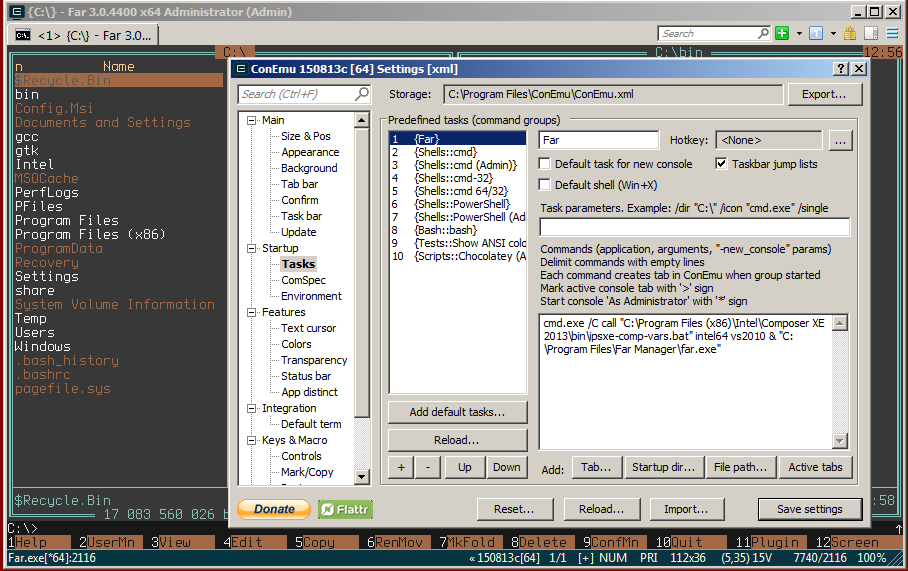

Console terminal (Highly recommended)

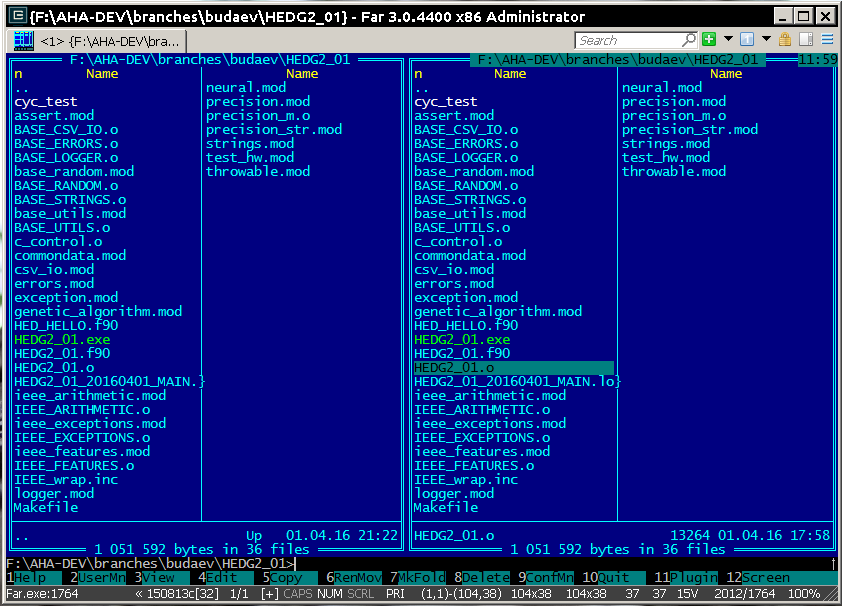

The Windows console (cmd) is extremely weak. Conemu https://conemu.github.io/ is a much better alternative, especially with the Far manager, a two-panel console file manager similar to the ancient Norton commander for DOS (or Midnight commander on Linux): http://www.farmanager.com/download.php?l=en.

It is also very helpful to have (on the Microsoft Windows) the GNU core utilities (grep, cut, sed, awk etc.). Some of them are used in the GNU make build system, and the bare minimum set is included with the Equation solutions gfortran. However, mandatory grep and expr are not there should be installed separately.

There are several distributions available, e.g. GnuWin32, Cygwin, MinGW, busybox-w32, ezwinports, UnxUtils, Gow, winbash. Among these available distros, Cygwin is the most complete and available for both 32 and 64 bit Windows platforms. It is recommended for installation.

-

Doxygen: Automatically generate program documentation (Highly Recommended)

This is a tool for writing software reference documentation. The documentation is written within code using special markup and can include formatting, formulas, tables, graphics etc. Doxygen can cross reference documentation and code, so that the user/reader of a document can easily refer to the actual code. It is trivial to install on Linux, but probably not so on Windows. Using the full power of the tool is not trivial though. Available from http://doxygen.org/. On Windows is is also highly desirable to have a LaTeX distribution, such as MikTeX (http://miktex.org) and Ghostscript (http://www.ghostscript.com), both are free software. LaTeX and Ghostscript are required to generate PDF.

-

Asciidoc: Markup text processor (Recommended)

Asciidoc is a markup text processor. This manual is written in asciidoc, but it is not used for anything except compiling a PDF version of this document. Asciidoc is trivial to install on Linux (check your package manager), but requires more efforts (due to many dependencies e.g. python, LaTeX etc.) on Windows. Check out asciidoc web site: http://asciidoc.org/ (or http://www.methods.co.nz/asciidoc/).

-

Geany (Recommended)

Lightweight IDE, Editor for code and any text files (including AsciiDoc). Works on Linux, Windows and Mac. http://www.geany.org/ Also need plugins: http://plugins.geany.org/ (The Geany SVN plugin for Windows requires command line tools like SilkSvn to work.)

-

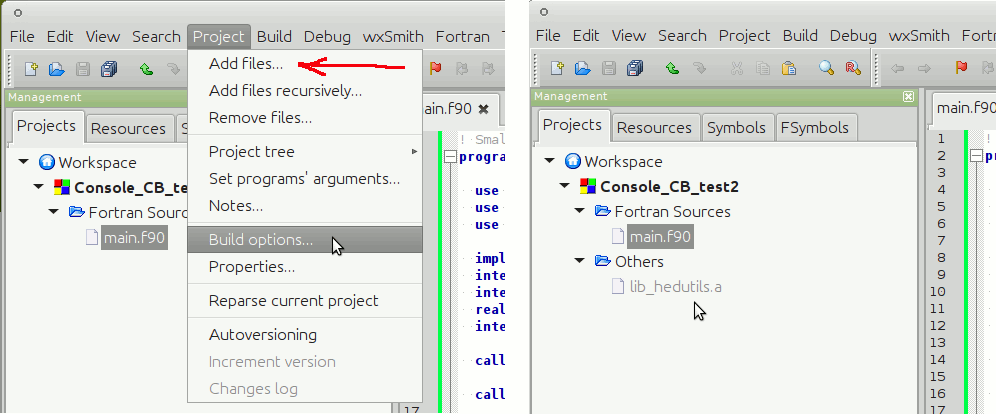

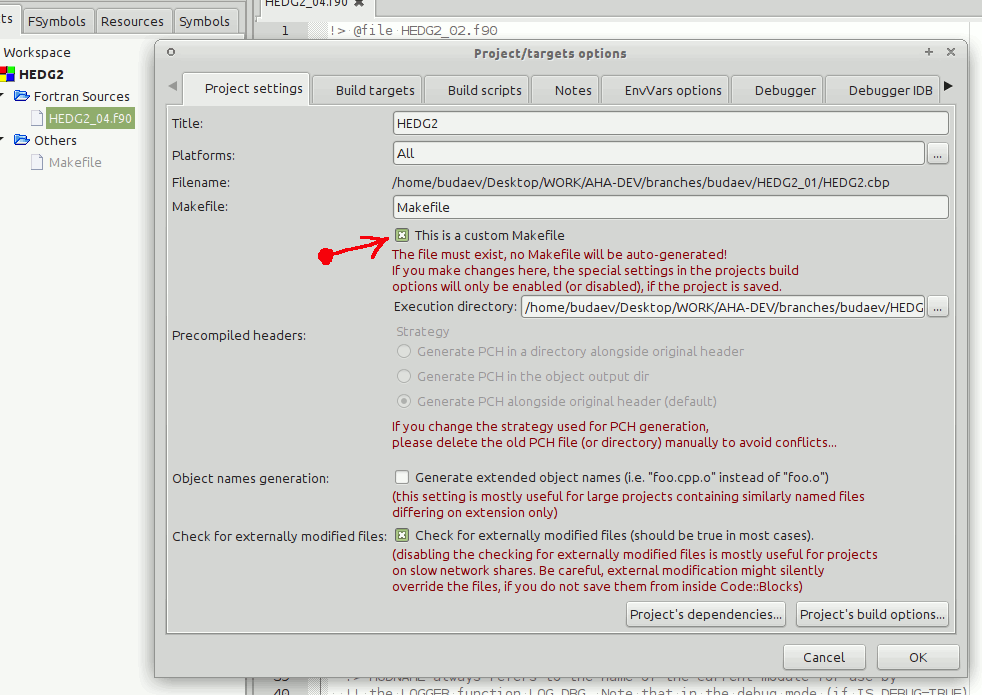

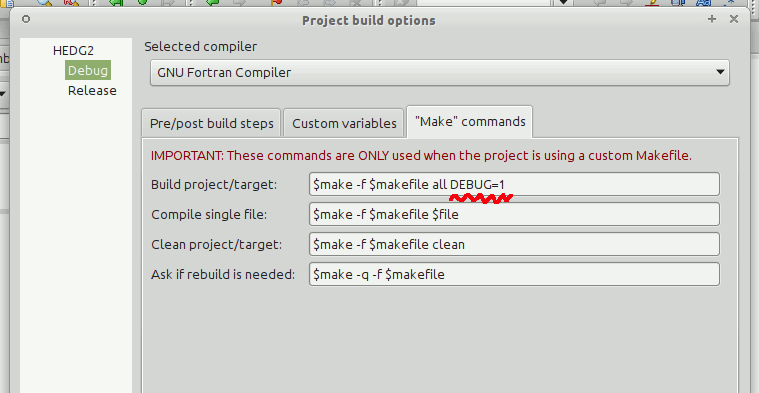

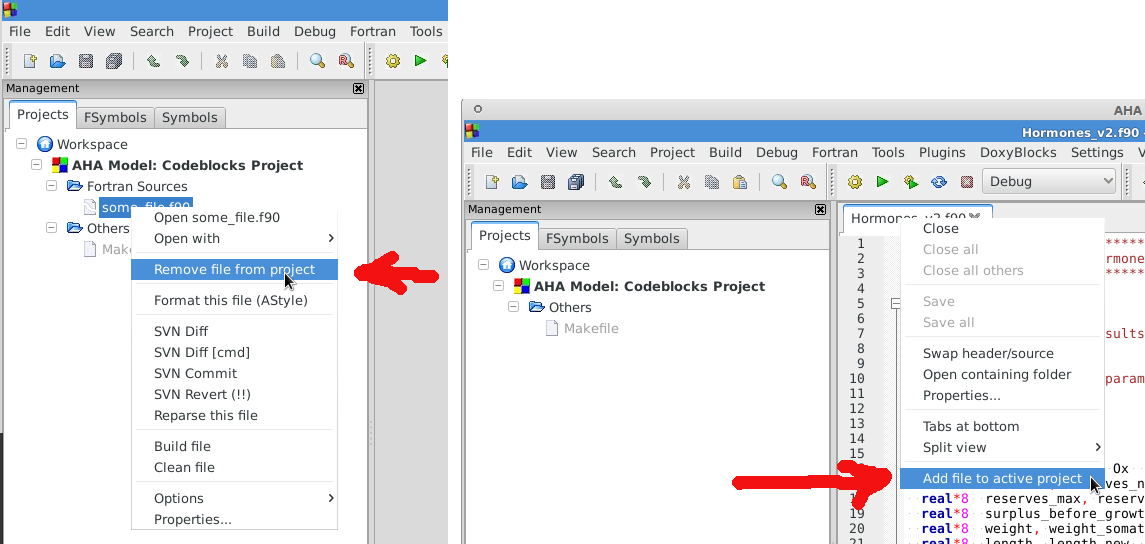

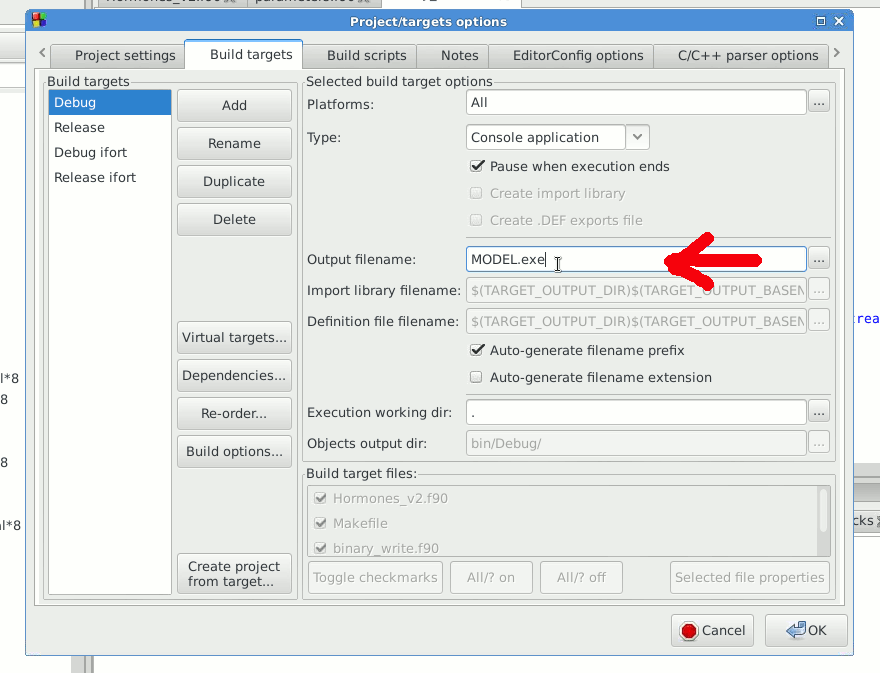

Code::Blocks for Fortran (Recommended)

IDE for Fortran. Works with many compilers, including Intel and GNU gfortran. http://cbfortran.sourceforge.net/. Installation by unpacking into some directory (i.e. does not require administrative rights). How to use this program for building for the AHA model is described below.

-

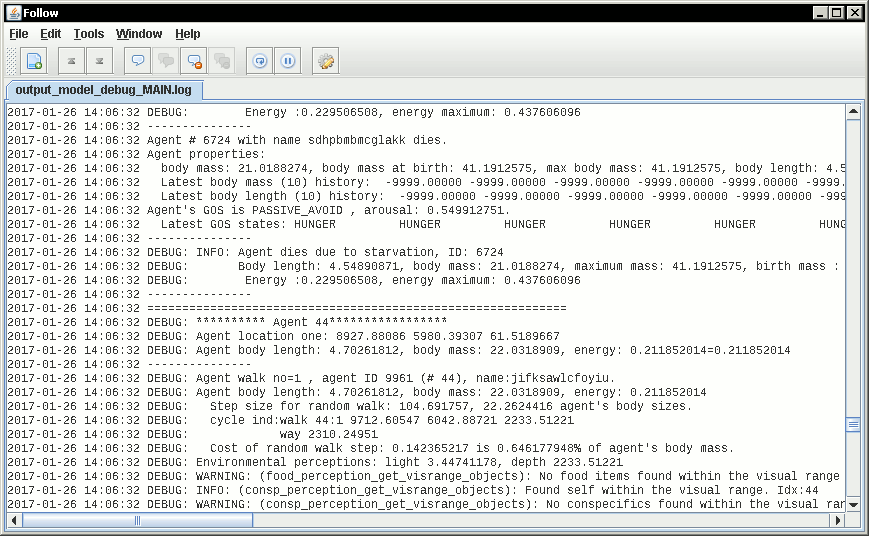

Follow: A logfile reading program (Optional)

Following a logfile while executing a program is done trivially on Linux: tail -f some_log_file.txt. There is a Java GUI program for reading log files that works on all major platforms installs by just placing in some directory: Follow. Available from http://sourceforge.net/projects/follow/.

2. Coding style: General guidelines and tips

2.1. General design

Simulation modelling differs from normal business and scientific programming. Development of a normal computer program is usually cumulative and incremental. The same code base is developed, updated, changed, maintained etc. The program as a whole remains basically the same. For example common office software like Microsoft Office or LibreOffice has been under constant cumulative development for decades.

A single program with a specific code base is developed during a long life cycle. The same program is intended to be distributed over many users (clients) and runs numerous times. The computer program itself is the main aim and the result (outcome).

It is not a problem to design the program around the single "main program" that is enclosed within program … end program statements with addition of various subroutines, functions and external modules.

program monumental_program

...

! main code goes here...

...

contains ! internal procedures

subroutine component()

...

end subroutine component

function math()

...

end function math

end program monumental_program

! external procedures

subroutine ext_component()

...

end subroutine ext_component

function ext_math()

...

end function ext_math

Modelling software, however, is very different. The program code is not the main aim and outcome of the work, but just a tool to understand some natural phenomena. In a sense, modelling code is quite ephemeral and changes frequently to model different things. The final program does not live for a long time but scrapped after a few runs (e.g. a series of computational experiments) and then significantly modified or just scrapped.

This requires programming techniques that would facilitate developing the computer program from reusable components. In a sense, building a new model should be like building short-lived toys from Lego bricks.

In spite of short life cycle, modelling code should be always archived to ensure accountability and reproducibility. This means that any specific version of the model (even whan "scrapped") should be always easily available for further work (running, rerunning, experimentation, modification to branch a new model etc).

The following design principles enable such a dynamic workflow:

-

Modularity — the program should be composed of many small "atomic" components, each doing a single thing rather than a big monolythic pieces. One subroutine or function should do only one thing, their size should usually fit on a single or at least two text editor (IDE) screens.

-

Headless design — there should be no or a very minimal main program, all the code should go to the modules (subroutines and functions). Ideally, the main program must look like this:

program model

use module_1

use module_2

...

use module_x

call do_model() ! the only one instruction to do all the work

end program

-

Reusability — each of the small pieces should be easily reused in a different programming project or model. Global parameters or variables(e.g. common block or module constants) should be kept to a minimum or avoided. Logically similar and consistent sets of procedures and functions must be collected into coherent programming units (modules) that can be easily plugged-in to a new project (e.g. by use module_x).

-

Documentation — The code and especially interfaces (parameters passed to the subroutines and functions) must be thoroughly documented. Documentation must always be consistent with the code. If the logic of the algorithm is changed, this must be reflected in the text description. The code is the work of science rather than just "coding" for the machine and must be primarily directed to be read by human beings.

-

Tests — The reusable modular code should include specific procedures for testing itself. For example a specialised set of subroutines for checking the calculations against predefined "true" results. This would ensure that changing something in the code does not break other pieces. Testing code should be checked and updated with the algorithm change.

One important emergent effect of such a programming workflow is that a framework of reusable components is created automatically without much special dedicated effort (but creating a programming framework/library is otherwise a costly task!). It is also very easy to test all individual components (e.g. via a separate main testing program). Thus, writing code for a specific model saves lots of effort for subsequent modelling work.

In contrast, writing monolythic code, especially in the "main program," leads to large extra work: every model is developed almost fully from scratch, resulting in huge waste of time and effort. Monolythic code is also more difficult to understand and test, it is prone to errors that are difficult to find and fix.

2.2. Code formatting rules

To get an easier and more efficient work with the code, it is good to follow universal rules in code formatting consistently.

|

|

Here are some links to Fortran programming style: Coding tips and Fortran style. |

-

Line length should be short, not exceeding 80 characters. Use the ampersand symbol & to wrap lines. Too long lines may not work on some compilers by default and do a lot of mess when you code on the terminal or have to check diff.

call CSV_MATRIX_WRITE ( reshape( &

[proto_parents%individual%body_length, &

proto_parents%individual%body_mass, &

proto_parents%individual%stomach_content_mass, &

proto_parents%individual%thyroid_level, &

proto_parents%individual%smr, &

proto_parents%individual%energy_current], &

[POPSIZE, 6]), &

"out_" // MODEL_NAME // "_" // TAG_MMDD() // &

"_gen_" // TOSTR(realgen, GENERATIONS) // csv, &

["LEN ","MASS", "STOM", "THYR","SMR ","ENRG"] &

)

........

!> Log generation timing

call LOG_MSG ("Generation " // TOSTR(realgen) // ", took " // &

TOSTR(stopwatch_generation%elapsed(),"(f8.4)") // &

" s since generation start")

-

Use lowercase for most of the coding. Specifically, fortran keywords, intrinsic functions etc. as well as normal variables should be in lowercase. Global and local parameters that are not allowed to change, in UPPERCASE (so they become easily identifiable). For example:

!> Genotype to phenotype gamma2gene initialisation value for **thyroid**

real, parameter, public :: THYROID_INIT = 0.5

....

call this%hormone_init(this%thyroid_level, THYROID_GENOTYPE_PHENOTYPE, THYROID_INIT)

-

Global variables that are defined in the upstream module but are not fixed parameters and so can change their value are in the "Camel Case" or, when very short, in UPPERCASE.

!> MMDD tag, year, month and day, used in file names and outputs.

character(LABEL_LENGTH), public :: MMDD

!> The current time step of the model. This is the global non-fixed-parameter

!! variable that is used and updated downstream in the subroutines.

integer, public :: Time_Step_Model_Current

-

External and library procedures that are not part of the Fortran intrinsic set and not part of the current model code should be in UPPERCASE. So they are easy to identify. Spherically, modelling tools functions and subroutines from the HEDTOOLS bundle should be in UPPERCASE, e.g.

! LOG_MSG and TOSTR are external procedures

call LOG_MSG ("Generation :" // TOSTR(realgen))

-

Global class names and all the derived classes are in UPPERCASE, so they are easy to identify within the code, e.g.

!> This type adds hormonal architecture extending the genome object

type, public, extends(INDIVIDUAL_GENOME) :: HORMONES

-

Block labels for particularly long or important pieces of the code are in UPPERCASE, so they are easy to identify, e.g.

ENVIRON_RESTRICT: if (present(environment_limits)) then ! Block label UPPERCASE

do while (.NOT. test_object%is_within(environment_limits))

call test_object%position( SPATIAL(current_pos%x + delta_shift(), &

current_pos%y + delta_shift(), &

current_pos%depth + delta_shift()) )

....

-

Always explicitly use the intrinsic type conversion functions, whenever conversion between types is necessary — even if automatic implicit conversion works correctly. This will avoid many bugs.

if ( ((real(sex_locus_sum,SRP)/real(sex_locus_num,SRP)) / &

(ALLELERANGE_MAX - ALLELERANGE_MIN)) <= SEX_RATIO ) then

-

Always use the result-style functions (i.e, with a result variable). This makes it easier to control the function type and avoid bugs.

elemental function alleleconv(raw_value) result (converted)

......

!> Type 1: no conversion from 0:1 to output allele value

!! @note identical to old alleleconv 1

!! `converted = raw_value`

converted = raw_value

end function alleleconv

-

Always explicitly set the intent of all parameters in any procedure. There should be no parameter without explicit intent. This helps avoid bugs and makes it much easier to convert procedures to pure and elemental.

-

Declare procedures pure or elemental whenever possible. There is a huge advantage of using elemental procedures as they transparently work with arrays and can be automatically parallelized by the compiler too!

elemental function carea(R) result (area_circ) ! Declare elemental

real(SRP), intent(in) :: R ! Set intent even in

real(SRP) :: area_circ ! the simplest cases.

area_circ = PI * R * R

end function carea

2.3. Efficient Fortran programming

|

|

A very helpful collection of advises and tips for efficient programming in Fortran can be found here: Fortran Best Practices |

-

Avoid using very long lines of code. They are difficult to read, especially if you (or your collaborator) use terminal editor limited by a 80 columns terminal. Working on the HPC cluster is always via the terminal. Also, compilers often do not like very long lines and may drop extra characters (resulting in compile errors). For example 132 characters is a standard limitation. But the default rules may be different on different compilers and platforms. Best try to use code lines limited by 80 characters — many editors have options to show a 80-characters limit line at the right.

|

|

In GNU gfortran compiler, -ffree-line-length-N flag controls how many characters (N) are allowed in a single line of code. The default valus is 132. none removes any limnt, so the whole line is used: gfortran -ffree-line-length-none code.f90. |

-

Use the ampersand & line continuation symbol and indents to format code showing its structure for easy reading.

-

Avoid non-standard and non-portable Fortran constructions that work on some compilers but not in others. Intel Fortran compiler can be especially notorious in implementing such constructs. Refer to the Fortran standard: Adams, J.C et al., 2009. The Fortran 2003 Handbook. Springer, DOI: 10.1007/978-1-84628-746-6.

-

Work at high level, use these tools, use objects, isolate as much as possible into subroutines In this way of coding, it becomes more clear what each part of the program is really doing and it is also easier to modify components of the program so that they don’t affect other irrelevant components.

GENERATIONS_LOOP: do while &

(realgen <= GENERATIONS .and. &

parents(1)%fitness > 0)

call sort_by_fitness()

call selection()

call mate_reproduce()

call offspring_fitness()

call generations_swap()

realgen = realgen +1

end do GENERATIONS_LOOP

-

Modularise: many small subroutines are easier to code, test, understand, reuse, and maintain that a single monolithic piece or very few general subroutines. Modularity can also involve hierarchical organisation, it is sometimes quite useful, when a limited scope is required, to define subroutines within subroutines (the keyword contains can be used within other subroutines and functions!):

! This is the main module

module THE_GENOME

use COMMONDATA

implicit none

.....

.....

contains

! It contains this subroutine...

subroutine chromosome_sort_rank_id(this)

class(CHROMOSOME) :: this

.....

call qsort(this%allele)

.....

contains

! And the above subroutine contains two further subroutines

recursive subroutine qsort(A)

.....

.....

end subroutine qsort

subroutine qs_partition_rank_id(A, marker)

.....

.....

end subroutine qs_partition_rank_id

end subroutine chromosome_sort_rank_id

end module THE_GENOME

-

Use short procedures rather than long ones. A single subroutine/function should ideally occupy not more than a single screen page (with vertical screen orientation). So the whole bunch of code is easy to overview and work with. Short procedures are particularly helpful in the object oriented code.

!> Calculate surface light at specific time step of the model.

!! Light (surlig) is calculated from a sine function. Light intensity

!! just beneath the surface is modeled by assuming a 50 % loss by scattering

!! at the surface: @f$ L_{t} = L_{max} 0.5 sin(\pi dt / \Omega ) @f$.

elemental function surface_light(tstep) result (surlig)

!> @returns surface light intensity

real(SRP) :: surlig

!> @param tstep time step of the model, limited by maximum LIFESPAN

integer, intent(in) :: tstep

surlig = DAYLIGHT*0.5_SRP*(1.01_SRP+sin(PI*2._SRP* &

DIELCYCLES*real(tstep,SRP)/(1._SRP*LIFESPAN)))

end function surface_light

-

Use meaningful labels. Global variable names should have longer names, sometimes even written in full, separate words with underscore _, e.g. some_global_variable so that Emacs, Vim and other advanced programming editors could make use of the words (i.e. SomeGlobalVariable is much less useful). Global names must therefore comment themselves, abbreviations should be very limited to the most obvious cases (e.g. fry_length is much better than FLEN). Local variables can have shorter names though, because they are used in limited contexts.

Also, using labels to mark do.. end do, if .. end if, forall and other similar constructs may greatly improve the readability of the code and make it more easy to understand, especially if there are many nested loops if..then.. end if constructs. No need to label all such things (this will just increase clutter), but those that are really important or very big must be. A couple of examples are below:

GENERATIONS_LOOP: do while &

(realgen <= GENERATIONS .and. &

parents(1)%fitness > 0)

.....

realgen = realgen + 1

... exit GENERATIONS_LOOP ! it is now clear which loop to "exit"

...

... cycle GENERATIONS_LOOP ! and clear which loop to "cycle"

! if there are several nested loops...

end do GENERATIONS_LOOP

SELECT_DEVIANT_CLASS: if (dev == 2) then

.....

else if (dev == 3) then SELECT_DEVIANT_CLASS

.....

else if (dev == 4) then SELECT_DEVIANT_CLASS

......

end if SELECT_DEVIANT_CLASS

-

Use whole-array operations and array slices instead of loops, prefer built-in loop-free and parallel instructions and array assignments (where, forall etc.): it is faster. Fortran 95, 2003 and 2008 has several looping/array assignment constructions that have been optimised for speed in multi-processor parallel environments. Never use loops to initialise arrays, and avoid using them to calculate array components. Whenever possible, reverse the order of indices in nested loops, e.g. first looping should be over the columns, and then over the rows. Nested loops may have huge speed overhead! Use FORALL and WHERE for "parallelized" array assignments. Below is a little test conducted on an average amd64 system using GNU Fortran (-O3 -funroll-loops -fforce-addr, timing is by Linux time).

! *** Test 1: Multiple nested loops, execution time = 0m12.488s

use BASE_UTILS

use BASE_RANDOM

implicit none

integer, parameter :: n=1000, a=100,b=100,c=100

integer :: nn, i,j,k

real :: random_r

real, dimension(a,b,c) :: M ! The above header part is the same in all tests

call random_seed_init

MATRLOOP: do nn=1,n

random_r = rand_r4()

do i=1,a ! Multiple nested loops

do j=1,b

do k=1,c

M(i,j,k) = random_r

end do

end do

end do

end do MATRLOOP

! *** Test 2: Direct array assignment, execution time = 0m1.046s

! header the same as above...

call random_seed_init

MATRLOOP: do nn=1,n

random_r = rand_r4()

M=random_r ! Direct array assignment

end do MATRLOOP

! *** Test 3: +forall+ instruction, execution time = 0m1.042s

! header the same as above...

call random_seed_init

MATRLOOP: do nn=1,n

random_r = rand_r4()

forall (i=1:a, j=1:b, k=1:c) M(i,j,k) = random_r ! Parallelised assignment

end do MATRLOOP

! *** Test 4: Reverse order of nested loops (cols then rows), execution time = 0m1.046s

! header the same as above...

call random_seed_init

MATRLOOP: do nn=1,n

random_r = RAND_R4()

do i=1,a

do j=1,b

do k=1,c

M(k,j,i) = random_r ! Order of looping is reversed

end do

end do

end do

end do MATRLOOP

Multiple nested loops with the most "natural and intuitive"

indices order (rows then cols) had a really huge execution

speed overhead

[This is because allocation of arrays in

the computer memory goes in an "index-reverse" order in Fortran, see

http://www.fortran90.org/src/best-practices.html#multidimensional-arrays]

,

more than ten times slower than the other methods (compare 12.5s and

1.0s!). The code is also more concise and easier to read. The same tests

with Oracle Solaris Fortran (f95) turning on aggressive optimization

and automatic loop parallelization (-fast -autopar -depend=yes) run much

faster, but the speed differences still remained quite impressive (first

test execution time = 0m0.010s, all other = 0m0.006s). So compiler-side

aggressive CPU optimisation does work, although the tricks remain very useful.

Fortran has many built-in functions that work on whole arrays and these would be faster than multiple nested loops coded manually. For example, many arithmetic functions (abs, … cos,… log, … sin… ) work with arrays as well as scalars. These are also useful: where, forall, as well as array logical operators with mask: all, any, count, maxloc, minloc, maxval, minval, merge, pack, unpack, product, sum. The code below illustrates some loop-free constructions:

!-------------------------------------------------------------------------------

! This program illustrates some loop-free Fortran constructions.

! Note that the order of indices here is: (column, row).

!-------------------------------------------------------------------------------

program LOOP_FREE

! Declare arrays and variables we need

implicit none

character(len=*), parameter :: fmt_str_r = "(3F8.1)" ! these are just for

character(len=*), parameter :: fmt_str_i = "(3I8)" ! output formatting

! Assign 2-D array values from a 1-D vector using 'reshape'

real, dimension(3,4) :: A = reshape( [ 1.1 , 2.1 , 3.1 ,&

1.2 , 2.2 , 3.2 ,&

1.3 , 2.3 , 3.3 ,&

1.4 , 2.4 , 3.4 ] , [ 3 , 4 ] )

integer, dimension(3,4) :: B = 0

integer, dimension(3) :: S = 0

logical, dimension(3) :: AB = F ! logical, can be either .TRUE. of .FALSE.

!-----------------------------------------------------------------------------

! Print original arrays

print (fmt_str_r), A(:,1) ! 1.1 2.1 3.1

print (fmt_str_r), A(:,2) ! 1.2 2.2 3.2

print (fmt_str_r), A(:,3) ! 1.3 2.3 3.3

print (fmt_str_r), A(:,4) ! 1.4 2.4 3.4

! *** Example 1: Assign values based on logical condition in 'where'

where( A > 3. ) ! 'where' clearly produces much simpler and

A=100. ! more concise code than two nested loops,

elsewhere ! it is also easier for the compiler to optimise

B=10 ! and therefore result in faster machine code.

end where

! Here is the result of this array operation:

print *, "---------------------------"

print (fmt_str_r), A(:,1) ! 1.1 2.1 100.0

print (fmt_str_r), A(:,2) ! 1.2 2.2 100.0

print (fmt_str_r), A(:,3) ! 1.3 2.3 100.0

print (fmt_str_r), A(:,4) ! 1.4 2.4 100.0

print *, "---------------------------"

print (fmt_str_i), B(:,1) ! 10 10 0

print (fmt_str_i), B(:,2) ! 10 10 0

print (fmt_str_i), B(:,3) ! 10 10 0

print (fmt_str_i), B(:,4) ! 10 10 0

! *** Example 2: Calculate sums of elements for the second (= cols) dimension of A

S = sum(A, dim=2)

print *, "---------------------------"

print (fmt_str_i), S ! 5 9 400

! *** Example 3: Find if the condition holds, for all values over the second (rows)

! dimension, similar function 'any' evaluates for any of these values.

AB = all(A > B, dim=2) ! Here we output values .TRUE. as T or .FALSE. as F

print *, AB ! F F T

end program LOOP_FREE

Note that newer versions of Fortran compilers can become smart enough to adjust the order of looping in the machine code. Nonetheless it is better to write "optimised" code, preferably not requiring hand-optimisation of the looping order, such as loop-free array constructions, that works fast just everywhere. Many of the Fortran loop-free constructions actually resemble similar Matlab functions.

-

Use parallel processing constructions. The latest F2008 standard includes specific language constructs that enable parallel processing in a standard and portable way: do concurrent and coarray Fortran. This is an example of a parallel looping construction implementing do concurrent:

do concurrent (i=1:ADDITIVE_COMPS)

d1 = ( perception / alleleconv( allelescale(gh(i)) ) &

) ** alleleconv( allelescale(gs(i)) )

neuronal_response = neuronal_response + d1/(1._SRP+d1)

end do

On systems and compilers that do not yet support automatic parallel processing, this is equivalent to the standard do-loop. Note that parallel processing capability should be invoked in the compiler. For Intel Fortran it is -parallel (Linux) or /Qparallel (Windows) compiler options.

2.4. Using strings

-

Always use assumed length strings defined as an asterisk length in subroutine and function dummy input parameters (intent(in)) rather than fixed length parameters. The latter may result in a "Character length argument mismatch" compiler error (or warning) if the function is, for example, called with literal string that does not have exactly the same length as in the definition.

That is, use such definition of the label parameter (assumed length):

subroutine allele_label_set(this, label)

class(GENE) :: this

character(len=*) :: label ! assumed length string, use this!

this%allele_label = label

end subroutine allele_label_set

Rather than this one (length fixed to LABEL_LENGTH characters):

subroutine allele_label_set(this, label)

class(GENE) :: this

character(len=LABEL_LENGTH) :: label

this%allele_label = label

end subroutine allele_label_set

In the former case, such code is safe even when "SEX_DETERMINATION" length (17) is unequal to LABEL_LENGTH:

some_allele%allele_label_set("SEX_DETERMINATION")

3. Document code as you write it with Doxygen

Doxygen is a very useful tool which allows to extract and produce documentation from the source code in a highly structured manner. Prior to parsing the code to get the documentation, one has to provide a configuration file for Doxygen. The doxywizard generates a wizard-like GUI to make this configuration file easily. There are many formatting symbols, Markdown codes are supported. Thus, it is easy to document the code extensively as it is being written.

Comments that are parsed through Doxygen are inserted into the source code using special markup language. The basic usage is quite simple. You should start comment line with "!>" rather than just "!", continuing Doxygen comments is done with two exclamation marks: "!!". Only comments formatted with this style are processed with Doxygen, you are free to insert "usual" comments, they are just ignored by the documentation generator.

The documentation description for a particular unit of the program, e.g. module, subroutine, function or variable definition, should normally go before this unit. Here is an example:

!-------------------------------------------------------------------------------

!> @brief Module **COMMONDATA** is used for definine various global

!! parameters like model name, tags, population size etc.

!! @details Everything that has global scope and should be passed to many

!! subroutines/functions, should be defined in `COMMONDATA`.

!! It is also safe to include public keyword to declarations.

!! `COMMONDATA` may also include subroutines/functions that have

!! general scope and used by many other modules of the model.

module COMMONDATA

......

!> MODNAME always refers to the name of the current module for use by

!! the LOGGER function LOG_DBG. Note that in the debug mode (if IS_DEBUG=TRUE)

!! LOGGER should normally produce additional messages that are helpful for

!! debuging and locating possible sources of errors.

!! Each procedure should also have a similar private constant PROCNAME.

character (len=*), parameter, private :: MODNAME = "COMMONDATA"

!> This is the target string, only for the prototype test

character(len=*), parameter, public :: GA_TARGET = "This is a test of genetic algorithm."

!> Model name for tags, file names etc. Must be very short.

character (len=*), parameter, public :: MODEL_NAME = "HEDG2_01"

There are various options and keywords. A few of them should be particularly useful in documenting the model(s) codes:

@param describes a function or subroutine parameter, may optionally include [in] (or out or in,out) specifier. An example is below

subroutine LOG_DBG(message_string, procname, modname)

implicit none

! Calling parameters:

!> @param[in] message_string String text for the log message

character (len=*), intent(in) :: message_string

!> @param[in] procname Optional procedre name for debug messages

character (len=*), optional, intent(in) :: procname

@returns describes a function return value. @retval is almost the same but starts with the function return value.

function TAG_MMDD() result (MMDD)

implicit none

!> @retval MMDD Returns an 8-character string for YYYYMMDD

character(8) MMDD

@brief starts a paragraph that serves as a brief description. @details starts the detailed description.

!-----------------------------------------------------------------------------

!> @brief LOG_DBG - debug message to the log

!! @details **PURPOSE:** This subroutine is a wrapper for writing debug

!! messages by the module `LOGGER`. The debug message message

!! defined by the `message_string` parameter is issued only

!! when the model runs in the debug mode, i.e. if `IS_DEBUG=.TRUE.`

subroutine LOG_DBG(message_string, procname, modname)

implicit none

@note insert a note with special emphasis in the doc text. @par start a new paragraph optionally with a title in parentheses. In the example above note also the use of Markdown formatting, such as double asterisks (*) for strong emphasis (bold) and reverse quote (`) for inline code (variable names etc.).

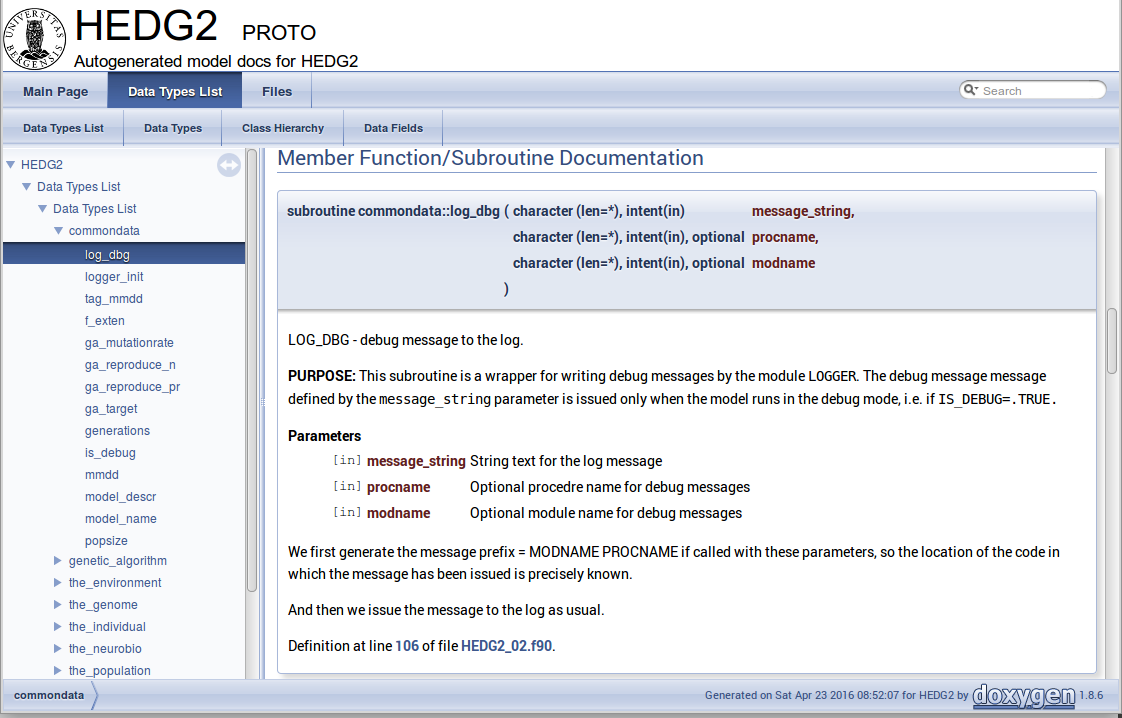

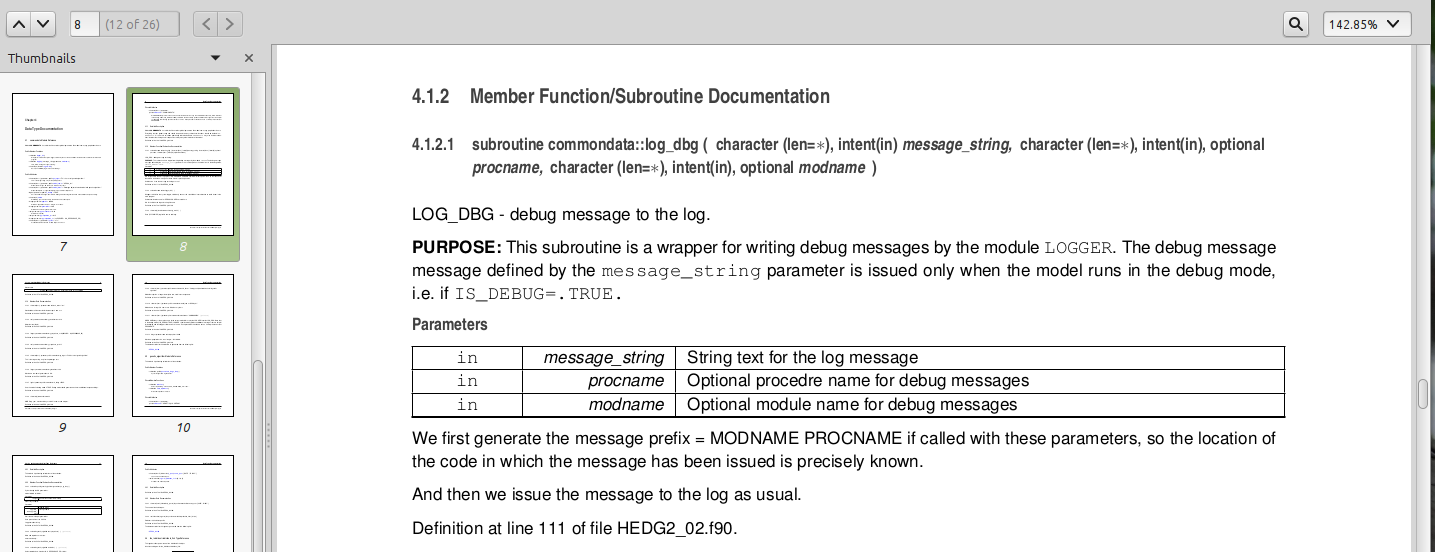

Doxygen parses the source code and produces highly structured documentation in different formats (e.g. html, rtf, latex, pdf etc.).

There are different options to generate HTML documents. For example, a bundle of HTML files with images , cross-references, code syntax highlighting and search functionality can be prepared. Alternatively, a single simpler HTML file can be done. LaTex output can be converted to PDF with references and index.

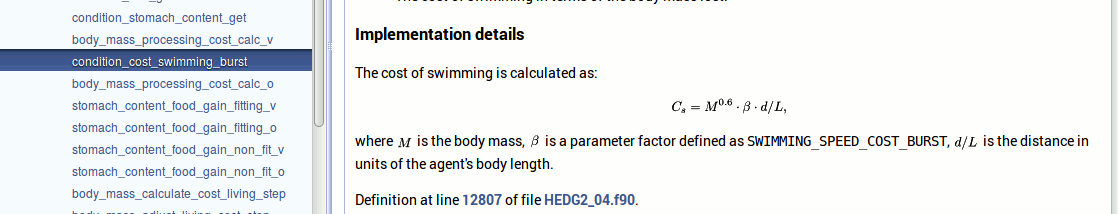

Examples of HTML and PDF outputs are below.

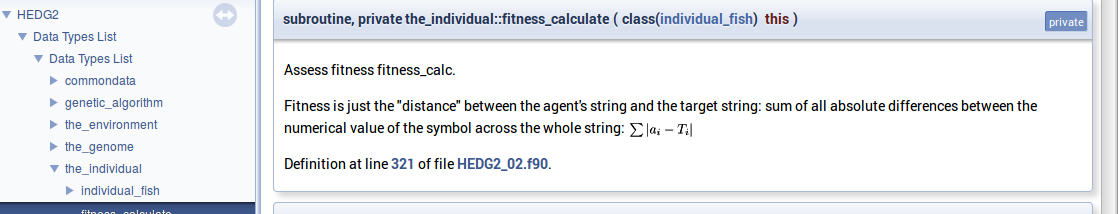

Here is an example of LaTeX formula in the autogenerated documentation file. Note that formulas are delimited with @f$ on both sides.

!> Fitness is just the "distance" between the agent's string and the target

!! string: sum of all absolute differences between the numerical value of

!! the symbol across the whole string: @f$ \sum |a_i - T_i| @f$

this%fitness = sum([(abs(iachar(this%str(i:i)) - iachar(GA_TARGET(i:i))), &

i = 1, len(GA_TARGET))])

This is rendered as follows:

To make the formula appear on a separate line, delimit it within @f[ and @f].

!> ### Implementation details ###

!> The cost of swimming is calculated as:

!! @f[ C_{s} = M^{0.6} \cdot \beta \cdot d / L , @f] where

!! @f$ M @f$ is the body mass, @f$ \beta @f$ is a parameter factor

!! defined as `SWIMMING_SPEED_COST_BURST`, @f$ d / L @f$ is the distance

!! in units of the agent's body length.

cost_swimming = this%body_mass**SWIM_COST_EXP * SWIMMING_SPEED_COST_BURST &

* distance / this%body_length

|

|

LaTeX, dvips and Ghostscript should be installed for the formula rendering to work correctly. There are web-based LaTeX equation editors, e.g. https://www.codecogs.com/latex/eqneditor.php |

Documenting a complex model is very important! It is also not really difficult, but requires some additional discipline. It is much easier to include Doxygen comments as you write the model code than to look through the whole (huge) amount of the code a month later just to recall what the code is actually doing. Thus, the model becomes much more understandable to the level of its finest details. And Doxygen allows inclusion of various markup commands and styles, LaTeX formulas and graphics. Doxygen documentation, faq’s and howtos are available here: http://doxygen.org

In the AHA GNU make system used to build the executables, documentation is generated using this simple command:

make docs

4. Version control: Subversion (SVN)

AHA Repository: https://tegsvn.uib.no/svn/tegsvn/

AHA Tools stable version (v1.1): https://tegsvn.uib.no/svn/tegsvn/tags/HEDTOOLS/1.1

4.1. Main terms

-

Working copy: the local file system directory that keeps the files synced with the Subversion server: your local copy of the code.

-

Checkout: download the files from the Subversion server initially, this sets up all the necessary data and configuration within the chosen working copy. Checkout is done only once.

-

Update: get the files with the latest changes from the Subversion server to the local file system directory (load). Files that you have changed locally and not yet committed to the server are kept intact, so your changes are never silently overwritten. To cancel all local changes use revert.

-

Commit: save the local changes to the files in your working copy to the Subversion server.

4.2. Overview

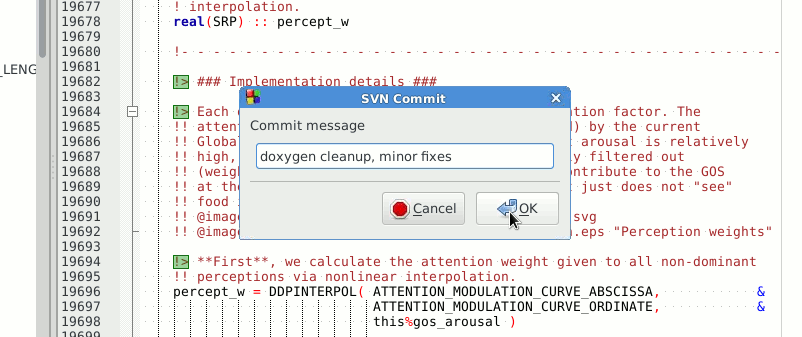

Subversion (SVN) is a version control system used in the AHA project. Use version control not only for just managing versions, but also for organising your coding. Every new code commit should ideally be a specific task, function or logical workflow unit. And the commit message should reflect this task.

For example, it would be perfect to commit changes in pieces involving implementation of a specific function in the model or to correct a specific bug. Use the log messages to describe briefly what has been done.

The usefulness of the whole version control workflow is limited if the commit pattern is haphazard and any single commit involves different kinds of code changes in many different places. It will be, for example, very difficult to revert from a single change that have previously introduced a bug. Revision history is a very valuable component of the development process!

If several people are working on the same piece of code, it is important to make commits frequently. Also frequently integrate others' changes. Otherwise, there is an increasing change to get version conflicts that have to be solved manually.

|

|

Never use the standard tools provided by the operating system (e.g. Windows Explorer) to delete/copy/move/rename files managed by Subversion. Always use the Subversion for all file operations. |

|

|

Always try to commit some logically integrated piece of code rather than do it haphazardly. Write informative commit messages. Commit changes frequently. |

The examples below assume you use a terminal console, but most SVN commands can also be easily performed from various GUI tools.

For example, imagine you add a neural response function. Commit the change, as soon as it is ready then (with log message like "Added general neural response function for neural bundles"). Go to the next logical piece of the work (e.g. fixing gamma2gene) afteer this commit and again commit this change when more or less ready (i.e. go to the next step only after you have commited the current changes). Then the versions you have will be organised into meaningful pieces:

svn commit model1.f90 -m "Added general neural response function for neural bundles"

.....

svn commit model1.f90 -m "Fixed gamma2gene function, Gaussian perception error"

A typical SVN repository organisation usually includes many branches for different purposes created by different developers. For example, the current TEG svn repo has this structure:

|-- branches # Private development branches

| |-- budaev

| |-- gabby

| |-- henrik

| `-- judy

|-- hormones_v2 # The hormone models and other models (below)

| |-- branches_camilla

| |-- branches_jacqueline

| |-- stochastic_trunk

| |-- stochastic_trunk_mortality

| |-- stochastic_trunk_mortality (a & b)

| |-- stochastic_trunk_mortality (a & b) - TEST

| |-- tests

| `-- trunk

|-- mesopelagic_latitude

| |-- branches

| |-- mesopelagic_barrier

| |-- mesopelagic_lifehistory

| `-- trunk

|-- nansen_seaice

| `-- trunk

|-- pacificherring

| |-- branches

| `-- trunk

|-- sexevolution

| `-- testingsexevolutionfortran

|-- tags # General-purpose modelling components (versions)

| |-- AHA_R

| |-- BINARY_IO

| |-- CSV_IO

| |-- HEDTOOLS

| |-- LINEARFIT

| |-- QSORT

| |-- UPTAKEMM

| `-- VISRANGE

`-- trunk # Accessory components (e.g. web server config)

|-- AHAWEB

|-- BOOKS

|-- DOCS

|-- scripts

`-- SVNSERVER

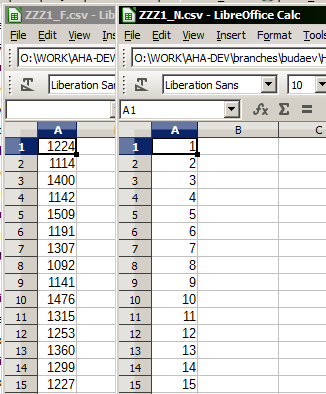

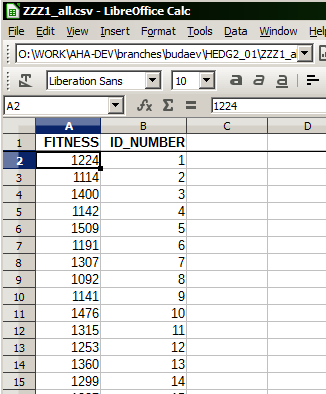

The HEDTOOLS folder itself has the following structure

`-- HEDTOOLS # Main place for the source files

|-- doc # Documentation for HEDTOOLS

|-- IEEE # Non-intrinsic IEEE math modules

`-- template # Templates for user Makefile's and

# HPC cluster batch scripts

Nonetheless, Subversion does not impose any limitations on the structure of directories and folders. The main advantages of Subversion over other version control systems is its simplicity and safety. One needs to know only a few commands (which can be called via graphical user interfaces) and it is virtually impossible to damage the data and code once it has gone to the server.

4.3. First time setup of the working copy

|

|

AHA Tools in (release 1.1) can be found here:

https://tegsvn.uib.no/svn/tegsvn/tags/HEDTOOLS/1.1;

Development versions are here:

https://tegsvn.uib.no/svn/tegsvn/branches/budaev/HEDTOOLS/.

So standard checkout (the stable version) is like this: svn co https://tegsvn.uib.no/svn/tegsvn/tags/HEDTOOLS/1.1 HEDTOOLS |

4.3.1. Command line tool

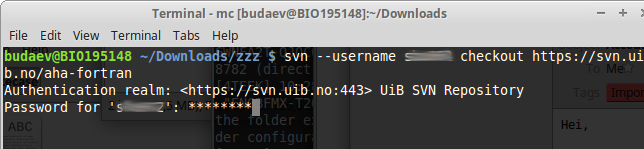

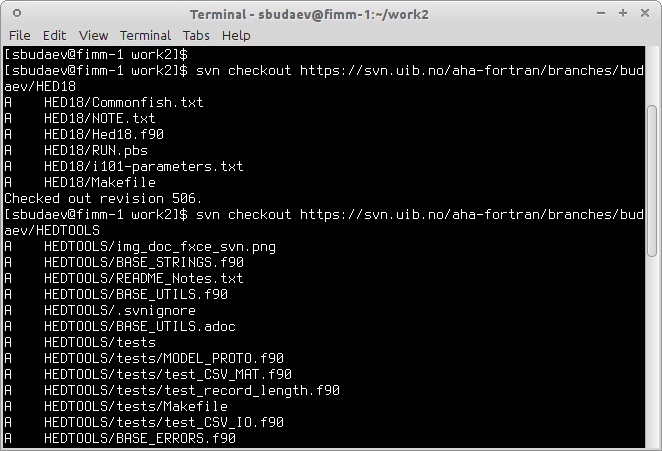

First time setup of the working copy of the model (working directory):

-

For a new project (run/experiment etc.), get into the working directory where the model code will reside (cd) (possibly make a new directory mkdir), and checkout: get the model code (one branch, no need to get everything!) from the server with svn checkout https://path_to_branch. When a specific repository is used for the first time, you should also include the user name for this repository (--username your_user_name) and then the program asks for the password. SVN server name, username and password is then saved, so subsequently it is not necessary to state the username/password you connect to the same SVN server from the same workstation. For example, first time checkout (for user u01):

svn --username u01 checkout https://tegsvn.uib.no/svn/tegsvn/branches/budaev/HED18

next, just this should work:

svn checkout https://tegsvn.uib.no/svn/tegsvn/branches/budaev/HED18

This will get the HED18 into the directory HED18 within the current working directory. If we use HEDTOOLS, it should also be placed here:

svn checkout https://tegsvn.uib.no/svn/tegsvn/branches/budaev/HED18

...

svn checkout https://tegsvn.uib.no/svn/tegsvn/branches/budaev/HEDTOOLS

So, we now get HED18 and HEDTOOLS in our working directory.

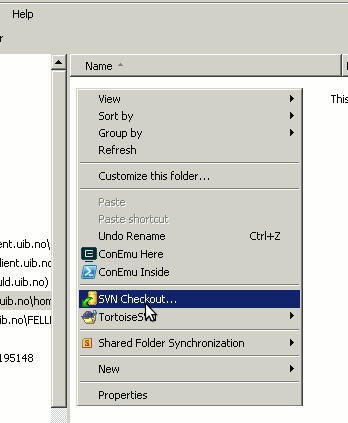

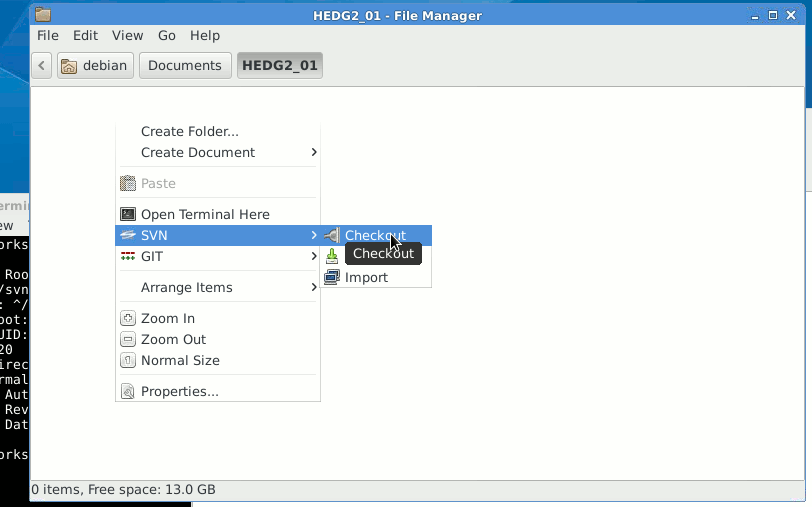

4.3.2. TortoiseSVN on Windows

-

Using the TortoiseSVN on Windows, initial setup is also simple.

First, choose some folder for keeping the working copies of the development files, open it in the Windows Explorer.

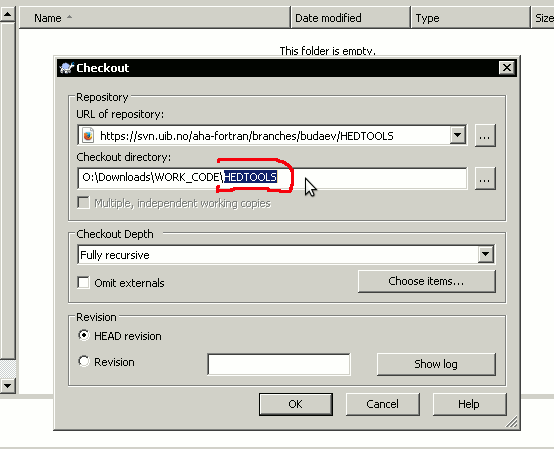

Then right-click somewhere within this folder, then choose TortoiseSVN and click Checkout. This will bring a window to enter the Subversion repository address. Now paste the address of the folder you are going to clone on the local machine. It is perhaps good to get the HEDTOOLS modelling tools initially as they are used anyway.

|

|

Unlike the command line client, TortoiseSVN by default clones to the repository directory into the current folder and does not create local folder with the same name as the remote one. |

It may therefore be necessary to retype the local directory name the same as the remote one:

Initially the system will also ask for the username and password.

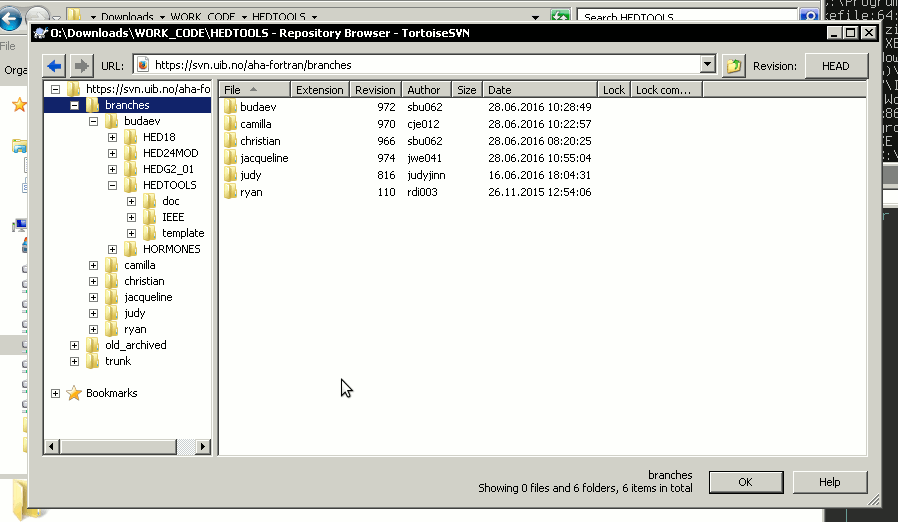

Repository browser that is called in the Checkout menu … button is a tool to explore the contents of the repository on the server. In Checkout menu it can be used to select the folder project to be cloned to the local machine. Also, using Repository browser you can make a private project directory on the server under /branches/your_name and then clone it to the local system using the Checkout menu.

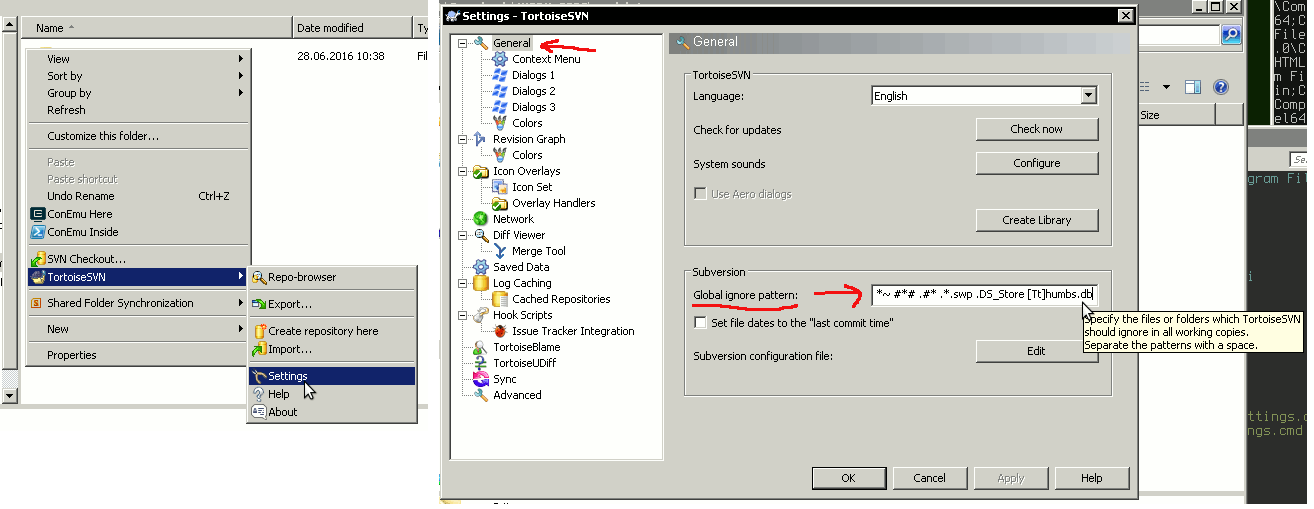

Alternatively, you can create project on the local machine first and use the menu item Import to import it to the repository. However, in the later case make sure you include only the Fortran (Matlab etc) program code into the Subversion and do not include the many accessory files created by the Microsoft Studio. They make clutter and are not needed in the versioning sytem. Use the TortoiseSVN → Settings → Ignore manu item for setting up ignore file patterns.

4.4. Standard workflow

Now you can work within this directory. This is the standard workflow.

-

update code from the server: svn up

-

edit the code using any favoured tools, build, run model etc…

-

diff (svn diff) to check what are the differences between the local file(s) or directory and those in the repository, to use specific visual diff tool use --diff-cmd diff_tool.

-

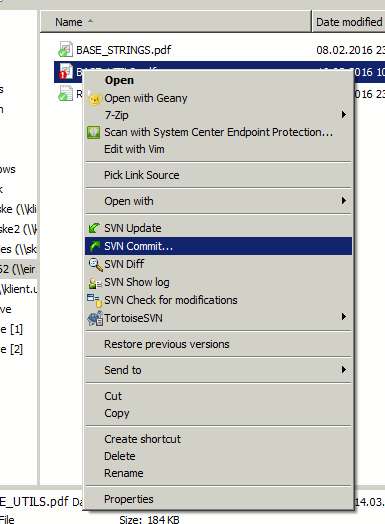

commit when ready (e.g. when a new piece of code has been implememnted): svn commit

commit will ask you to provide a short descriptive log message. It will run the standard text editor for this by default (can be configured). But you can provide such a message just on the command line with the -m option:

svn commit Hed18.f90 -m "New sigmoid function"

Both update and commit can be done for the working directory as well as for specific file. E.g. to commit only the model code Hed18.f90 do:

svn commit Hed18.f90

Both update and commit can be performed within any subdirectory of the working copy. In such cases they are limited to this subdirectory only.

4.5. Log of changes

The svn log command will issue the list of log messages, by default in the reverse order (the most recent logs go first), so the development progress is seen. The log messages can be filtered by date, revision number etc. Check out svn help log.

Example: To show only 5 most recent log messages for the specific file Hed18.f90 use such a command:

svn log -l 5 Hed18.f90

|

|

There is a small caveat with svn log. By default it shows log messages from the local working copy (not repository). So, if you did many commits lately but did not svn update, the latest messages will be absent from the log. So, do svn update! |

There is a useful utility svn2cl that generates standard GNU-style ChangeLog file. This utlity can be found in the standard Debian-based Linux repositories (subversion-tools). So, installation is trivial on Linux. Download it from the official site: svn2cl.

Example: The command below produces a slightly more concise daily log.

svn2cl --group-by-day

4.6. Using branches

A branch in Subversion is just a directory on the SVN server. It can be thought of in the same way as common file system directory/folder. Creating a new folder is easy:

# Making a new directory for old code -- use the mkdir command

svn mkdir https://tegsvn.uib.no/svn/tegsvn/old_archived

It is also easy to move or copy parts of the repository across the repository:

# Move a model branch to the archive folder -- use mv (move) command

svn mv https://tegsvn.uib.no/svn/tegsvn/trunk/model_20151013 \

https://tegsvn.uib.no/svn/tegsvn/old_archived/model_20151013

....

# Copy a file to another branch -- use cp (copy) command

svn cp https://tegsvn.uib.no/svn/tegsvn/trunk/hormones/Hormones.f90 \

https://tegsvn.uib.no/svn/tegsvn/branches/camilla/hormones/Hormones.f90

Do not forget to update the local working copy after deleting/moving/copying directories on the SVN server, then local copy will be in sync with the server.

4.6.1. Make a branch copying old code

The copy command is very useful to create a copy of some repository part to a separate branch. Then some new features or functions can be implemented in the branch and then reintegrated back to the parent project. Or an independent new model can be initialised in such a way.

Making a branch is easy, use svn copy source_svn_path destination_svn_path to do this. For example, the following command makes a copy of the whole sub-tree for the model code HED18 from user budaev private branch to the user natasha private branch. Now natasha can work on her own copy of the code and, when done, merge the changes back to budaev's code. Finally, budaev's (and natasha's) code can be reintegrated back to the trunk main line.

svn copy https://tegsvn.uib.no/svn/tegsvn/branches/budaev/HED18 \

https://tegsvn.uib.no/svn/tegsvn/branches/natasha/HED18 \

-m "Creating private branch."

4.6.2. Merge changes between branches

If several people are simultaneously working on the project, it make sense to merge changes from the parent branch back to the current branch (e.g. from trunk to budaev and natasha). Thus does not allow the code to diverge too far and reduces the chances to get version conflicts. Merging ongoing changes from the parent project is easy. For example, the following will merge changes from trunk back to the current branch (note that ^ substitutes the SVN repository web address):

svn merge ^/trunk/HEDTOOLS/

That is, with this syntax we have provided the source for merging (^/trunk/HEDTOOLS/) into the current directory.

Merge can be conducted in both ways (to and from different branches to keep them in sync). This is the main component in branch maintenance. And it is quite trivial. Make a branch — merge changes from trunk or another branch.

To undo a merge that has not yet been committed to the server, e.g. if it was done by mistake in a wrong directory, do this:

svn revert -R .

4.7. Other features

Keywords. Subversion has a very useful feature: you can set various properties (svn propset). For example, one can set tags on files or directories. A very interesting feature is that svn:keyword properties can be incorporated into the source files under SVN control. For example, you can include specific tags into the Fortran (or any other managed) source code so that they are updated automatically.

One user case for this is this. Define special $Id tag. This tag includes the file name, last changed revision number, revision date and time and the user who did the revision. This is how it will appear in the source code:

! The comment below incorporates SVN revision ID, it should apparently be

! inserted into a comment, so does not affect the compiler:

! $Id: HEDTOOLS.adoc 14226 2023-06-18 09:33:07Z sbu062 $

! other code follows...

......

implicit none

...

To set up this tag we just have to issue such command:

svn propset svn:keywords "Id" file_name_to_set_keyword.f90

and include two strings $Id anything in between initially $ in this source text to set where the keywords should be placed. Obviously, we have to commit change to the server after this. From now on, the information will be updated automatically between the $Id … and $ symbols. So the source code itself will have comments indicating the revision number etc. There are many useful tags that can be placed in such a way. For example $Date $Revision $HeadURL $LastChangedDate. If several tags should be placed, one can set up several keywords for a particular file:

svn propset svn:keywords "Id Date Revision HeadURL LastChangedDate" file_name.f90

Check out full documentation in the SVN manual about propset and svn:keyword.

|

|

Subversion keywords are case sensitive, so $ID or $id won’t work. |

Change Subversion main repository address. If the main svn repository address is changed for some reason, svn relocate command is useful:

svn relocate --username user_name https://tegsvn.uib.no/svn/tegsvn/

TortoiseSVN client on Windows has a Relocate menu item under TortoiseSVN.

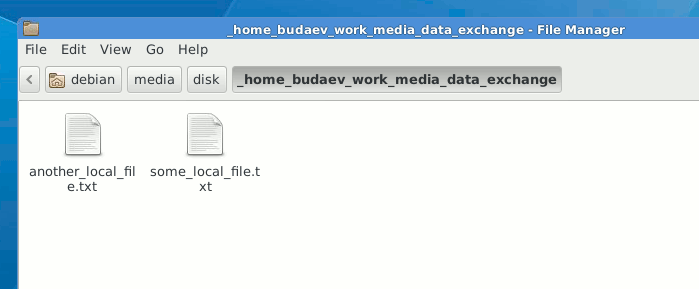

WebDAV access. It is possible to access the Subversion repository using the standard WebDAV protocol (https://) as a virtual folder without installing any client software. WebDAV is supported by most operating systems, including Windows and Linux. On Windows, use the "Map network drive"" menu to establish connection to the server. On Linux, just place such an address in the file manager ("Ctrl L" may be required to go to the address line): davs://tegsvn.uib.no/svn/tegsvn/

4.8. GUI Tools

Using the GUI tools like TortoiseSVN is similar to using the terminal commands. With GUI you should just select the appropriate item from the menu list.

Initial setup for the repository in TortoiseSVN is simple.

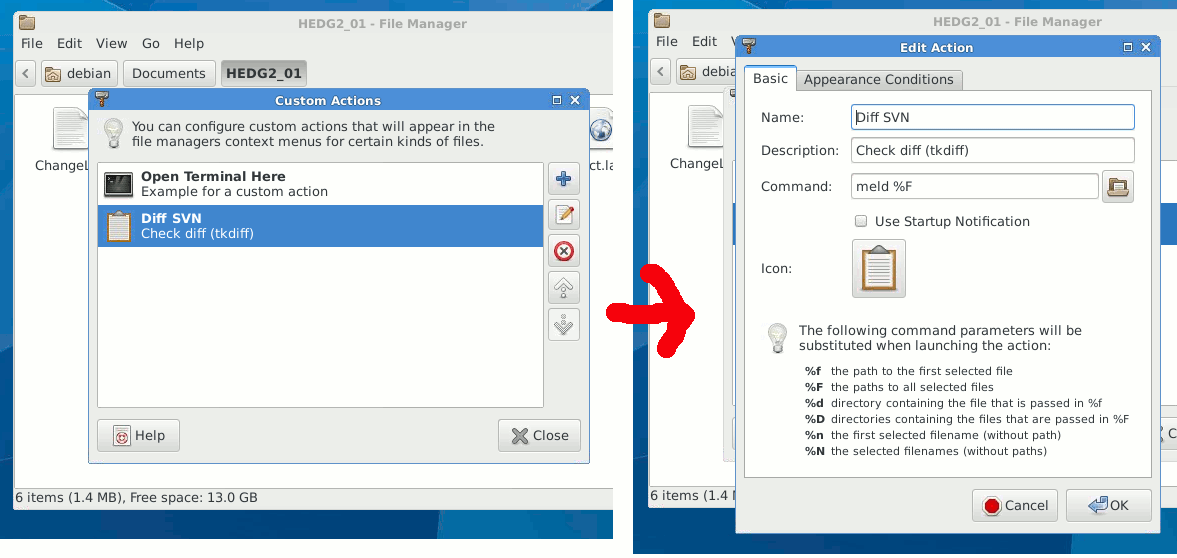

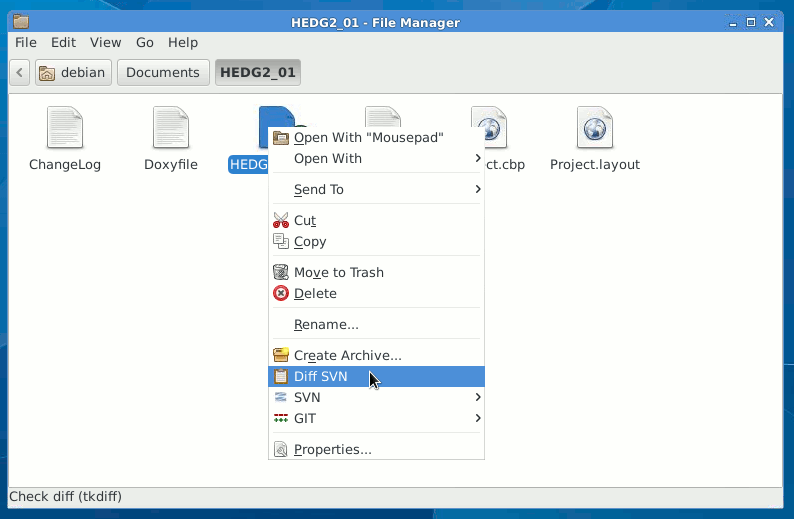

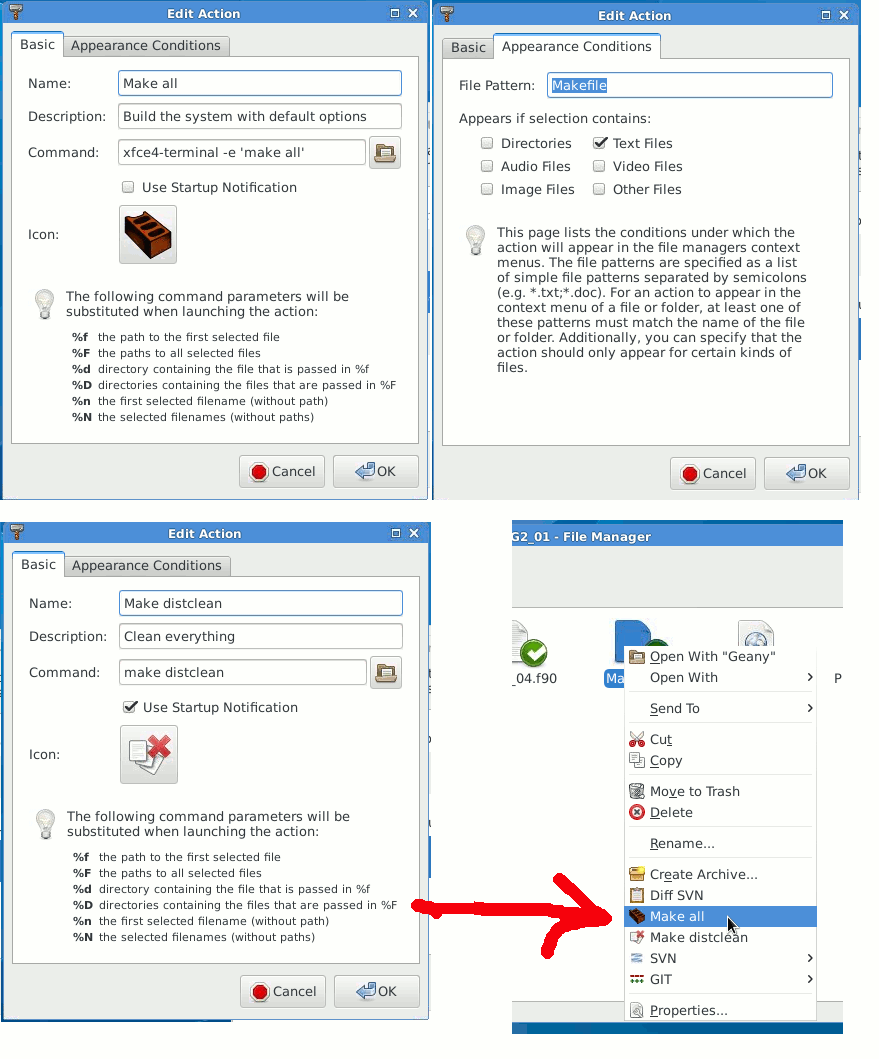

Checking changes, diff-ing, setting properties and keywords etc. is also very easy and visual with the built-in tool. Another useful feature is the revision graphs showing sequence of versions and pattern of branching. TortoiseSVN is incorporated into the Windows explorer and uses small overlay icons to show the status of the files and directories.

Similar GUI tools, although not as mature as TortoiseSVN, exist for Linux. For example, there is thunar-vcs-plugin (Git and Subversion integration into the Thunar file manager).

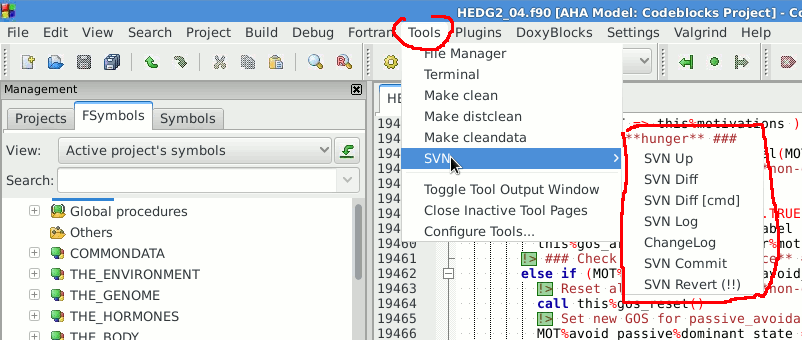

Subversion also integrates with numerous other tools, e.g. there is an SVN plugin for the Geany editor (GeanyVC), plugins for the Microsoft Visual Studio IDE etc.

For example, AnkhSVN is a nice free tool integrating Subversion into Microsoft Visual Studio.

Do not forget that version control systems are not only for just program code but any text-based files. So writing papers in LaTeX benefits from a built-in Subversion support in the TexStudio.

5. Object-oriented programming and modelling

5.1. General principles

Modern Fortran (F2003 and F2008 standards) allows coding in a truely object-oriented style. Object oriented style allows to define user’s abstractions that mimic real world objects, isolate extra complexity of the objects and create extensions of objects.

Object oriented programming is based on the following principles:

-

Abstraction: defining and abstracting common features of objects and functions.

-

Modularity and hiding irrelevant information: An object is written and treated separately from other objects. Details about internal functioning of the object are effectively hidden, what is important is the interface of the object, i.e. how it interacts with the external world. This reduces complexity.

-

Encapsulation: combining components of the object to create a new object.

-

Inheritance: components of objects (both data and functions) can be inherited across objects, e.g. properties the "genome" object inherited by a more general object "the individual."

-

Polymorphism: the provision of a single interface to objects of different types.

5.2. Simple basics

Stated simply, the object-oriented programming paradigm is based on the notion of object. Here object is an entity that integrates data and procedures that are implemented to manipulate these data. In the simplest case, data can be considered as the "properties"" or "attributes" that describe the object. Procedures that are linked with the object, on the other hand, provide other derived attributes of the object or describe what the object can "do".

Different objects can be arranged in various ways (e.g. form more complex objects like arrays). For instance a population of agents (another object) can be simply formed by arranging individual agents (other objects) into an array. Various agents can also interact with each other.

For example, a single "agent" object is an entity having such attributes as sex, spatial position, body mass, body length etc. It can also have such boolean attributes as "is alive" (true or false). For any such object, one can calculate instantaneous risk of predation and other transient derived properties. Also, the agent can interact with objects of various other kinds. For example, an agent can change its spatial position (its position attribute is changed), approach a food item and "eat" it (basically, absorb the mass attribute of the item, the item is destroyed thereafter). Agent can also do many other things, e.g. "die". The functions that are linked to the object are usually called methods.

When an instance of the object is created, it is initialised in a function (e.g. init) that is often called the constructor. Another procedure is sometimes implemented to destroy and deallocate the object, it is the destructor.

5.3. Type-bound procedures

Object-oriented code in modern Fortran is based on what is called type-bound procedures.

Briefly, a derived type is declared using the type keyword; it can contain several intrinsic and other derived types. Thus, a data structure is implemented.

type, public :: SPATIAL_POINT

real(SRP) :: x, y, depth

character(len=LABEL_LENGTH) :: label

....

end type SPATIAL_POINT

A procedure can then be declared that operates specifically on this derived type.

-

The first parameter this refers to the object that the procedure operates on.

-

The base object this is declared as class in the procedure, which allows to accept any extension of the this object as the first parameter. This is called "polymorphic objects."

Note that the other parameters (non this) can be declared as class or as type. In the former case, the procedure could accept any extensions (the procedure is then polymorphic) of the object, while in the latter, only this specific type (non-polymorphic procedure).

Components of the object are separated from its name with the percent sign %, e.g. the x coordinate is this%x.

function spatial_distance_3d (this, other) result (distance_euclidean)

class(SPATIAL_POINT), intent(in) :: this

real(SRP) :: distance_euclidean

class(SPATIAL_POINT), intent(in) :: other

distance_euclidean = dist( [this%x, this%y, this%depth], &

[other%x, other%y, other%depth] )

end function spatial_distance_3d

The procedure is then included into the derived type declaration.

The name of the procedure that is implemented (e.g. spatial_distance_3d in the example above) is not directly called in calculations and can be declared private. Instead, a public interface name is declared in the derived type that defines how the procedure should be called, in the example below it is distance.

Note that the interface name can coincide for several different objects, however the actual procedure name (spatial_distance_3d) must be unique within the module that defines the derived type and its procedures.

type, public :: SPATIAL_POINT

real(SRP) :: x, y, depth

character(len=LABEL_LENGTH) :: label

....

contains

procedure, public :: distance => spatial_distance_3d

....

end type SPATIAL_POINT

Now, the procedure is called for the specific instance of the object (it comes to the procedure as the this first "self" parameter) using the public interface name (distance) rather than the "actual" procedure name (spatial_distance_3d).

type(SPATIAL_POINT) :: point_a, point_b

...

distance_between_points = point_a%distance( point_b ) ! use public interface

An extension object can be declared, using the extends keyword, that will use all the properties and type-bound procedures of the base object and add its own additional ones. All the data attributes (x, y, depth) of the base class SPATIAL_POINT are now defined (inherited) also for the new derived type SPATIAL_MOVING. Additionally, the new type can define new properties ans add new type-bound procedures.

This allows creating complex inheritance hierarchies across objects.

type, public, extends(SPATIAL_POINT) :: SPATIAL_MOVING

! The following component adds an array of history of the object

! movements:

type(SPATIAL_POINT), dimension(HISTORY_SIZE_SPATIAL) :: history

...

contains

....

procedure, public :: go_up => spatial_moving_go_up

procedure, public :: go_down => spatial_moving_go_down

....

end type SPATIAL_MOVING

It is also possible to redefine the type-bound procedures for the new derived type. For example, a subroutine init can be defined for the base type SPATIAL_POINT that sets the default x, y and depth. A different type-bound procedure with the same public interface init defined for the SPATIAL_MOVING extended type can then set the default x, y and depth and, in addition, a default move. When such init procedure is called, the result of the computation is based on the exact nature of the object on which the procedure is executed. This is called procedure overloading for a polymorphic object.

call instance_object%init()

-

If the instance_object is SPATIAL_POINT, the init procedure defined for SPATIAL_POINT is executed on the object;

-

if the instance_object is SPATIAL_MOVING, the init procedure defined for SPATIAL_MOVING is executed.

5.4. Module structure

The structure of a module that defines an inheritance hierarchy of objects and their type-bound functions is like this. The module skeleton below implements also two init procedures such that spatial_moving_init overloads the spatial_init.

module SPATIAL_OBJECTS

! Declarations of objects:

type, public :: SPATIAL_POINT

real(SRP) :: x, y, depth

character(len=LABEL_LENGTH) :: label

....

contains

procedure, public :: init => spatial_init

procedure, public :: distance => spatial_distance_3d

....

end type SPATIAL_POINT

....

type, public, extends(SPATIAL_POINT) :: SPATIAL_MOVING

! The following component adds an array of history of the object

! movements:

type(SPATIAL_POINT), dimension(HISTORY_SIZE_SPATIAL) :: history

...

contains

....

procedure, public :: init => spatial_moving_init

procedure, public :: go_up => spatial_moving_go_up

procedure, public :: go_down => spatial_moving_go_down

....

end type SPATIAL_MOVING

.....

! other declarations

.....

contains

! Here go all the procedures declared in this module

function spatial_distance_3d (this, other) result (distance_euclidean)

class(SPATIAL_POINT), intent(in) :: this

real(SRP) :: distance_euclidean

class(SPATIAL_POINT), intent(in) :: other

distance_euclidean = dist( [this%x, this%y, this%depth], &

[other%x, other%y, other%depth] )

end function spatial_distance_3d

subroutine spatial_init(this)

class(SPATIAL_POINT), intent(inout) :: this

....

end subroutine spatial_init

subroutine spatial_moving_init(this)

class(SPATIAL_MOVING), intent(inout) :: this

....

end subroutine spatial_init

! Any other procedures

..........

end module SPATIAL_OBJECTS

An object or several related objects (derived types) together with their type-bound procedures are defined within the same Fortran module.

5.5. Class diagram

Relationships between different objects (classes) can be represented graphically in a class diagram. Here, a class (derived type) is represented by a box with a title that gives its name. The relationships are then depicted by several types of lines and arrows that connect these boxes.

The simplest and most widespread symbols in a class diagram are presented below.

-

inheritance shows which class is the base class and which is its extension;

-

aggregation indicates that several objects are "assembled" to create a more complex composite object;

-

composition is a strong form of "aggregation" that points to a "part versus whole" relationship."

5.6. Arrays of objects

Components of a derived type are referred using the percent symbol %, e.g. agent%sex refers to a component sex of the object agent. Both data components and "methods" are referred in this way, although methods use parentheses (e.g. parents%individual%probability_capture()).

Derived type data objects can be combined into arrays as normal intrinsic type variables. For example, the sex component of the i-th element of the array of derived type agent is referred as agent(i)%sex.

If arrays are defined at several levels of the object hierarchy, it can create quite a complex structure:

population%individual(i)%chromosome(j,k)%allele(l)%allele_value(m)

5.7. Implementation of objects

The above declarations just define an object. To use the object, we must instantiate it, i.e. create its specific instance and give it a value. This is analogous to having a specific data type, e.g. integer. We cannot use "just an integer," we need (1) to create a specific variable (variable is also an object though trivial!) of the type integer (e.g. integer :: Var_A) and (2) to assign a specific value to it (Var_A=1).

For example, the following creates two instance arrays of the type INDIVIDUAL_FISH. Both arrays are one-dimensional and have POPSIZE elements. So we now have two fish populations, generation_one and generation_two. Each individual value of such an array, e.g. generation_one(1) is an instance of the object of the type INDIVIDUAL_FISH that can be quite a complex data structure including many different data types, even arrays and lower-order derived types. So, instead of being arrays of simple values these object arrays are in fact arrays of complex data structures potentially consisting of many different data types and arrays:

type(INDIVIDUAL_FISH), dimension(POPSIZE) :: generation_one

type(INDIVIDUAL_FISH), dimension(POPSIZE) :: generation_two

We can now assign concrete values to each of the previously defined components of generation_one array, e.g.

generation_one(i)%sex = "male" ! assign values to individual components

generation_one(i)%alive = .true. ! of the object instance

generation_one(i)%food(j) = "spaghetti"

We can also use the subroutines and type-bound functions that we have defined within the object definitions to do specific manipulations on the object and its components:

subroutine population_init()

....

do i = 1, POPSIZE

call generation_one(i)%init() ! Initialise the i-th fish object in the

end do ! "generation_one" population array

! using the object-bound subroutine init

end subroutine population_init

5.8. A trivial example: Stopwatch object

Here is a trivial example implementing a stopwatch object — TIMER_CPU. The comments in the code are self-explanatory.

!> Here we define CPU timer container object for debugging and

!! speed/performance control. Therefore we can instantiate arbitrary timers

!! for different parts of the code (and also global). "Class," so can extend.

!! Using a specific timer (`timer_general`)

!! is like this: `call stopwatch%start()` to start the stopwatch, then the

!! function `stopwatch%elapsed()` returns the elapsed time.

!! @note The near-trivial nature of this object makes it ideal for learning

!! how to implement objects. TODO: add to doc full implementation.

type, public :: TIMER_CPU

!> Define start time for the stopwatch.

!! @note We need to keep only the start time as raw values coming out

!! of `cpu_time` are machine-dependent

!! @note It does not seem good to move `TIMER_CPU` to *HEDTOOLS* as they

!! are for portability (require only F90) and do not use OO.

!! `TIMER_CPU` uses full OO extensible class implementation so

!! requires *F2003* minimum.

real(SRP) :: cpu_time_start

contains

procedure, public :: start => timer_cpu_start ! subroutine

procedure, public :: elapsed => timer_cpu_elapsed ! function

end type TIMER_CPU

....

....

!=============================================================================

! The two procedures below are for the CPU timer / stopwatch object

!-----------------------------------------------------------------------------

!> Start the timer object, stopwatch is now ON.

!! @note We do not need exact low-level time as it is machine-specific.

subroutine timer_cpu_start(this)

class(TIMER_CPU) :: this

!> this turns on the CPU stopwatch

call cpu_time(this%cpu_time_start)

end subroutine timer_cpu_start

!-----------------------------------------------------------------------------

!> Calculate the time elapsed since the stopwatch subroutine was called

!! for this instance of the timer container object. Can be called several

!! times showing elapsed time since the grand start.

function timer_cpu_elapsed (this) result (cpu_time_elapsed)

class(TIMER_CPU) :: this

!> @returns the time elapsed since `timer_cpu_start` call (object-bound).

real(SRP) :: cpu_time_elapsed

! Local var

real(SRP) :: cpu_time_finish

!> We use the intrinsic `cpu_time` to get the finish time point.

call cpu_time(cpu_time_finish)

!> Elapsed time is then trivial to get.

cpu_time_elapsed = cpu_time_finish - this%cpu_time_start

end function timer_cpu_elapsed

Declarations for the instantiation of such an object look like this:

!> This is the stopwatch objects for global and for timing each generation

type(TIMER_CPU) :: stopwatch_global, stopwatch_generation

The use of the stopwatch objects is now rather simple:

! Start global stopwatch

call stopwatch_global%start()

....

....

! Print elapsed time in the log message;

! check out the function stopwatch_global%elapsed() that actually gets

! the elapsed time:

call LOG_DBG("Initialisation of generation one completed, took " // &

TOSTR(stopwatch_global%elapsed(), "(f8.4)") // &

"s since global procedure start.")

5.9. More information

Below are some books that should be referred for more information on object-oriented programming in modern Fortran.

-

Adams, J. C., et al., (2009). The Fortran 2003 Handbook. Springer.

-

Akin, E. (2003). Object-Oriented Programming via Fortran 90/95. Cambridge University Press.

-

Brainerd, W. S. (2015). Guide to Fortran 2008 Programming. Springer.

-

Chapman, S. J. (2007). Fortran 95/2003 for Scientists and Engineers. McGraw-Hill.

-

Clerman, N. S., & Spector, W. (2012). Modern Fortran: Style and usage. Cambridge University Press.

6. Introduction to the AHA Fortran modules

6.1. Overview of AHA modules

The modelling framework is build on these principles: (1) modularity, (2) extensibility, (3) portability.

The Modelling framework is composed of two separate components: (1) HEDTOOLS, modelling utilities and tools (implemented as portable Fortran modules, not object-oriented) that have general applicability and are used for data conversion, output, random number generation and execution logging. HEDTOOLS modules are designed such that they can be used in many different simulation projects, not only the AHA model; (2) The AHA model, an object oriented evolutionary agents simulation framework implementing standard reusable module components.

HEDTOOLS:

-

Module BASE_UTILS — utility functions.

-

Module CSV_IO — Data output in CSV (comma separated values) format.

-

Module BASE_RANDOM — Utilities for random number generation.

-

Module LOGGER — Logging facility.

-

Module BASE_STRINGS — String manipulation utilities.

-

Non-intrinsic IEEE modules — implement IEEE arithmetic checks and exceptions tracking.

The AHA Model

-

Module COMMONDATA Setting common parameters for the model.

-

Module THE_GENOME Implementation of the genome objects, gene, alleles, chromosomes.

-

Module THE_HORMONES Architecture of the hormones and their functions.

-

Module THE_NEUROBIO Implements neurobiological architectures based on sigmoid function, decision making and GOS.

-

Module THE_INDIVIDUAL Implements the individual agent in the final form and the individual-based model functions.

-

Module THE_POPULATION Implements the population(s) of agents.

-

Module THE_ENVIRONMENT Implements the environment and its variation.

-

Module THE_EVOLUTION Implements the genetic algorithm.

|

|

Solaris Studio Fortran compiler f95 v. 12.4 does not support all object-oriented features (most probably the type-bound functions and polymorphic classes) of the Fortran 2003 standard and does not compile the AHA model code issuing this error: f90: Internal Error, code=fw-interface-ctyp1-796. Though, it does compile the more portable non-object-oriented HEDTOOLS modules without issues. It is believed that the next major release of Oracle Studio will include full support of these Fortran features. Recent Intel and GNU compilers work as expected with all object-oriented code. |

6.2. Modules in Fortran

Module is just a piece of Fortran program that contains variable or constant declarations and functions and subroutines. Modules are defined in such a simple way:

module SOME_MODULE

character (len=*), private, parameter :: text_string = "its value"

integer :: some_variable

real, dimension(:)

contains ! subroutines and functions go after "contains"

subroutine SOME_SUBROUTINE(parameters)

...

end subroutine SOME_SUBROUTINE

end module SOME_MODULE

To use any variable/constant/subroutine/function from the module, the program must include the use MODULE_NAME statement:

use SOME_MODULE

....

Invoking the modules requires the use keyword in Fortran. use should normally be the first statements before implicit none:

program TEST

use BASE_UTILS ! Invoke the modules

use CSV_IO ! into this program

implicit none

character (len=255) :: REC

integer :: i

real, dimension(6) :: RARR = [0.1,0.2,0.3,0.4,0.5,0.6]

character (len=4), dimension(6) :: STARR=["a1","a2","a3","a4","a5","a6"]

..........

end program TEST

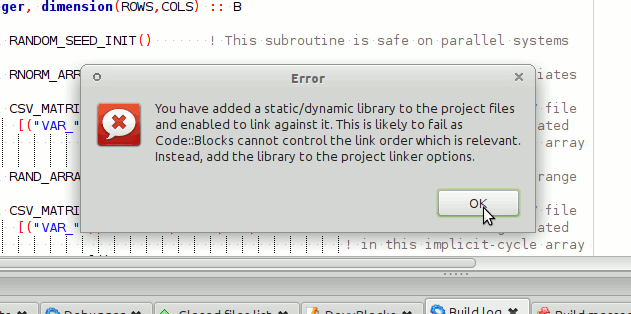

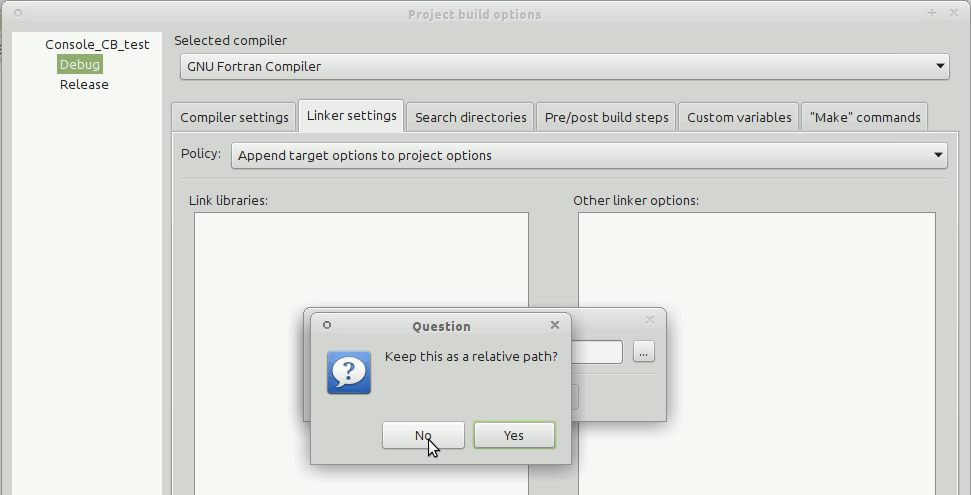

Building the program with these modules using the command line is normally a two-step process:

build the modules, e.g.

gfortran -g -c ../BASE_CSV_IO.f90 ../BASE_UTILS.f90

This step should only be done if the source code of the modules change, i.e. quite rarely.

build the program (e.g. TEST.f90) with these modules

gfortran -g -o TEST.exe TEST.f90 ../BASE_UTILS.f90 ../BASE_CSV_IO.f90

or for a generic F95 compiler:

f95 -g -c ../BASE_CSV_IO.f90 ../BASE_UTILS.f90

f95 -g -o TEST.exe TEST.f90 ../BASE_UTILS.f90 ../BASE_CSV_IO.f90

A static library of the modules could also be built, so the other more changeable code can be just linked with the library.

|

|

Note

The examples above assume that the module code is located in the upper-level directory, so ../. The make system used to build the model cares about the HEDTOOLS modules automatically. |

7. Module: BASE_UTILS

This module contains a few utility functions and subroutines. So far there are two useful things here: STDOUT, STDERR, TOSTR, CLEANUP, and RANDOM_SEED_INIT.

7.1. Function: TOSTR